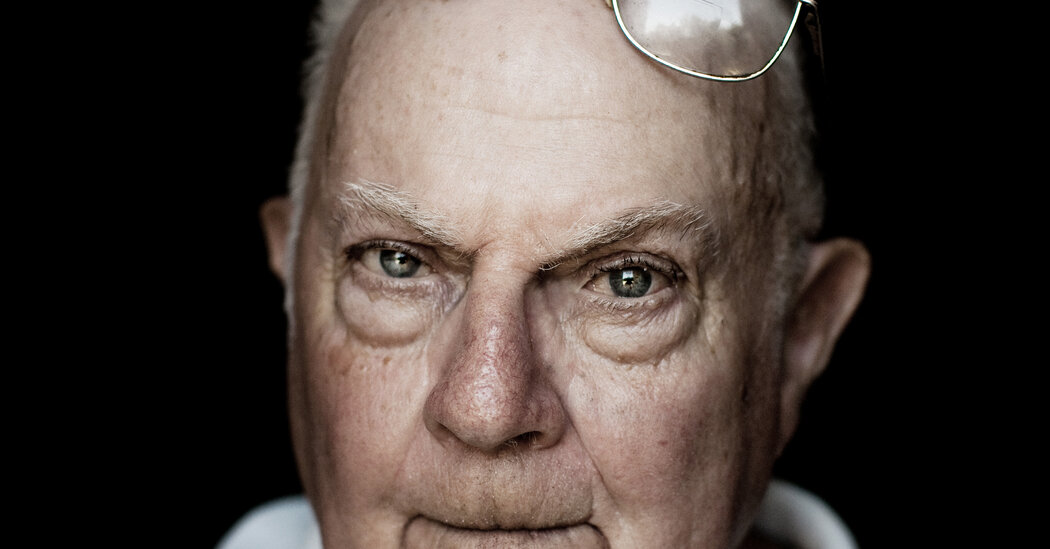

The Lives They Lived

Remembering some of the artists, innovators and thinkers we lost in the past year.

Donald Triplett B. 1933

After being sent away at 3 years old, he was the first person diagnosed with autism.

By the time he was 5, words tumbled out of his mouth like cryptic poetry. “Through the dark clouds shining.” “Dahlia, Dahlia, Dahlia.” “Say, ‘If you drink to there, I’ll laugh and I’ll smile.’” In perfect pitch, he sang Christmas carols before he was 2. He spun numbers, memorizing and reciting them and, later, multiplying and cubing them faster than you could press the equals sign on a calculator. They were concrete, they were solvable, unlike so much of life. Mary and Beamon Triplett recognized their son Donald’s talents. But they worried about him. He rarely cried for his mother and preferred solitude to her hugs. He often didn’t give his father a glance when he arrived home (but squealed with delight at spinning pans, pot lids and blocks). And he needed uniformity: If someone moved the furniture, he had a tantrum. Regularly, he paced across the room, shaking his head side to side while whispering or humming a three-note tune, as if he had composed a language that he alone understood. This was the 1930s, and doctors had no diagnosis for children like Donald. Instead, they had labels: imbecile, moronic, feebleminded. And mothers were often blamed. (Isn’t it always so?) One physician told Mary she had “overstimulated” Donald and suggested, as many doctors would have, that she send her son away. The family would be better off. In the summer of 1937, Mary and Beamon drove their almost 4-year-old son to an institution called the Preventorium in, of all places, Sanatorium, Miss. Donald was the youngest child there. Relegated to white bloomers, a white top and bare feet, he engaged with toys, not other kids. 
His eating improved — his food refusal was one reason Mary and Beamon agreed to send him — but perhaps only because he couldn’t leave the table until his plate was clean. On their twice-monthly allowed visits, his parents must have seen what others noted. The light in Donald’s eyes had faded. The smile he radiated, gone. This was a decade before Dr. Benjamin Spock told parents: “Trust yourself. You know more than you think you do.” Mary knew. Against the Preventorium director’s advice, the couple brought him home about a year after they dropped him off. It was one of many ways Donald was lucky. His mother was loving and determined, and she was from a prominent family in Forest, Miss. Her father was a founder of a local bank. Beamon was a top lawyer. They had money, and they had connections. In his law office soon after Donald came home, Beamon dictated a 33-page letter about his son’s medical and psychological history, his behaviors, aptitudes and challenges, to Dr. Leo Kanner, one of the world’s leading child psychiatrists, at Johns Hopkins Hospital. Kanner met with the Tripletts and spent two weeks observing Donald, whose history and constellation of behaviors didn’t fit anything from the textbooks or the patients he had treated. Over the next few years, Kanner saw 10 more children who were similar to Donald in their “powerful desire for aloneness and sameness,” Kanner wrote. His article on what became known as autism was published in April 1943. Donald T. was Case No. 1: the first child formally diagnosed with the disorder. Back home, Donald’s mother had pushed the public elementary school to allow him to attend, as Caren Zucker and John Donvan detail in their book, “In a Different Key: The Story of Autism.” He eventually graduated from high school and went on to a nearby college. That was in stark contrast to the outcomes for many others at the time. 
There was no federal legislation mandating that children with disabilities had the right to public education until the 1970s. It would take years longer for the public to understand, through writers like Temple Grandin, that many people with autism had particular talents and intelligences and that the disorder was not rare. In the United States today, about one in 36 children have been identified with autism spectrum disorder, according to the C.D.C. Triplett lived out his life in Forest, where he held various jobs at his grandfather’s bank — counting checks, sorting mail, looking up customer balances. To employees, some who had known Triplett most of their lives, he was smart, kind. A jokester with some quirks. They didn’t expect him to speak more than a sentence or two at a time. They knew he would rarely meet their eyes. But he connected in other ways, including by giving them nicknames. Even after he retired, every weekday he returned to the bank at 2 p.m., stopping by each person’s desk. “Hey there, Parker the Barker,” he said with a wave. “Hi, Celestial Celeste.” The routine probably comforted him. And it pleased his friends, who came to expect Donald’s daily rounds of delight.

Maggie Jones is a contributing writer for the magazine and teaches writing at the University of Pittsburgh. She has been a Nieman fellow at Harvard University and a Senior Ochberg fellow at the Dart Center for Journalism and Trauma at Columbia University.

Sinead O’Connor B. 1966

Uncovering the unlikely story behind the singer’s first album.

To say that Sinead O’Connor never quite regained the musical heights of her 1987 debut album, “The Lion and the Cobra,” is not to slight the rest of her output, which contained jewels. There is no getting back to a record like that first one. It was in some sense literally scary: The label had to change the original cover art, which showed a bald O’Connor hissing like a banshee cat, for the American release. 
In the version we saw, she looks down, arms crossed, mouth closed, vulnerable. The music had both sides of her in it. A fuzziness has tended to hang over the question of who produced “The Lion and the Cobra.” The process involved some drama. O’Connor clashed with the label and dropped her first producer, Mick Glossop, highly respected and the person the label wanted. In the end, she produced the album largely by herself. But not entirely. There was a co-producer, an Irish engineer named Kevin Moloney, who worked on the first five U2 albums and Kate Bush’s “Hounds of Love.” He and O’Connor went to school at the same time in the Glenageary neighborhood of Dublin, where he attended an all-boys Catholic academy next to her all-girls Catholic school. But Moloney didn’t know O’Connor then, though they took the same bus. In Asheville, N.C., this fall, Moloney sat in the control room of Citizen Studios, where he is the house producer, and hit play on “The Lion and the Cobra.” The first song is a ghost story called “Jackie.” A woman sings of her lover, who has failed to return from a fishing expedition. You’re on deep Irish literary sod, the western coast and the islands. It’s the lament of Maurya in J.M. Synge’s play “Riders to the Sea,” grieving for all the men the ocean has taken from her, except that the creature singing through O’Connor will not accept death. “He’ll be back sometime,” she assures the men who deliver the news, “laughing at you.” At the end, her falsetto howls above the feedback. She starts the song as a plaintive young widow and ends it as a demon. “Gets the old hairs going up,” Moloney said. “Where did she get that?” I asked. “Those different registers?” “It was all in her head,” he said. “She had these personas.” And the words? Were they from an obscure Irish shanty she found in an old newspaper? “Oh, no, she wrote it herself,” Moloney said. 
“Her lyrics were older than she was.” Moloney’s connection with O’Connor came through U2’s guitarist, the Edge (David Evans). In late 1985, the band was between albums, so Evans did a solo project, scoring a film. He recruited O’Connor — who had just been signed to the English label Ensign Records — to sing on one tune, and Moloney engineered the session. O’Connor was 18, with short dark hair. Ensign put her together with Glossop, who had just co-produced the Waterboys’ classic album “This Is the Sea.” But she spurned the results: “Too pretty.” Glossop remembered O’Connor as reluctant to speak her mind in the studio, leading to a situation where small differences of opinion weren’t being addressed, leaving her alienated from the material. With characteristic careerist diplomacy, she called Glossop a “[expletive] ol’ hippie” (and derided U2, who possessed some claim to having discovered her, as fake rebels making “bombastic” music). Glossop recalled that when he ran into her at a club a couple of years later, she hugged him and apologized — “which was a nice gesture,” he told me. Nobody has ever heard those first, abandoned tracks from “The Lion and the Cobra.” “They put a big sound, a band sound around her,” Moloney said, “and she was disappearing in it.” Glossop remembered it slightly differently. “She had a rapport with her band,” he said, “and I recorded them as a band. But she was turning into a solo artist.” She was also pregnant, unbeknownst to Glossop (“It would have been nice to know,” he said). The father was the drummer in the band, John Reynolds, whom she had known for a month when they conceived. According to O’Connor’s autobiography, “Rememberings,” the label pressured her to have an abortion, sending her to a doctor who lectured her on how much money the company had invested in her. O’Connor not only insisted on keeping the baby; she also told the label that if it forced her to put out its version of the record, she would walk. 
“They eventually caved,” Moloney said. “They told her, ‘Make it your way.’” But with a “limited budget.” That’s when she reached out to Moloney, in the spring of 1986, saying she needed someone she trusted. He started taking day trips to Oxford, where she was holed up in a rental house. “We were in the living room,” Moloney said. “A bunch of couches and a bunch of underpaid, underloved musicians who were struggling big time.” “There was a bit of a little communal hub,” he said, “always a few joints going around the room. Sinead loved her ganja. A lot of talking, and then somebody would start to play, and people would pick up instruments. And Sinead was, like, captain of the ship.” When they got into the studio in London, Moloney turned the earlier, band-focused approach inside out like a sock. O’Connor’s voice was allowed to dictate. The musicians worked around it. For the song “Jackie,” he said, “Sinead wanted to do all of those guitar parts herself. And she wanted to do it really late at night, when everybody else was gone home. She didn’t feel good about her guitar playing. I got her to do this really distorted big sound, and then we layered it and layered it. It became this sort of seething. She was like, ‘Look at me — I’m Jimi Hendrix.’” The most difficult challenge in recording O’Connor, he said, was finding a microphone that could handle her dynamic range, with those whisper-to-scream effects she was famous for. “Once we figured out the right way of capturing her vocals” — an AKG C12 vintage tube mic — “she did it really fast.” I must have looked amazed — the vocals are so theatrical and swooping, O’Connor’s pitch so precise, that I had envisioned endless takes — because Moloney said, as if to settle doubts, “Within a couple of takes, it was done.” The jangly guitar opening of the third track, “Jerusalem,” played. “I remember hearing her play this for the first time,” Moloney said. 
“I got it, knowing her background.” O’Connor was abused — psychologically, physically, sexually — by her mother, who died in a car accident, and by the Catholic Church. “All the systems had failed her,” Moloney said, “that were supposed to protect her.” If he was right that he heard trauma in “Jerusalem,” the song lyrics also drip with erotic angst (“I hope you do/what you said/when you swore”). It introduces the record’s main preoccupation: love and sex as they intersect with power and pain. The streams cross with greatest emotional force in the song “Troy,” one of the most beautiful and ambitious pieces of mid-1980s popular music. The track sticks out production-wise, with a powerful, surging orchestral arrangement (the product of O’Connor’s collaboration with the musician Michael Clowes, who also played keyboards on the album). There’s a moment in the song when O’Connor repeats the line, “You should’ve left the light on.” I had never given undue thought to what it meant. Something about tortured desire: If you had left the light on, I wouldn’t have kissed you. But Moloney said it had a double meaning. When O’Connor was punished as a child and made to sleep outside in the garden shed, her mother would turn off all of the lights in the house. “There wouldn’t be a light on for her,” Moloney said. O’Connor gave birth to her son, Jake, just weeks after Moloney finished the mixes. She told Glasgow’s Daily Record that although the baby had kicked when she sang in the studio, he slept now when the record came on. “She was so happy,” Moloney said with tears in his eyes. “She said: ‘Oh, my God, I can’t believe I went through all of that and I’ve arrived here with a record I love. Also, here’s my baby!’ She had two babies in one year.”

John Jeremiah Sullivan is a contributing writer for the magazine who lives in North Carolina, where he co-founded the nonprofit research collective Third Person Project.

Darryl Beardall B.
1936 His high school track coach told him to run until he got tired. He never got tired. Darryl Beardall was a high school sophomore in Santa Rosa, Calif., when he tried out for the track team. The coach told him to run around the track until he got tired. He ran 48 laps — 12 miles — and reported back to the coach. “I’m not tired,” he said. Beardall kept running. He ran in the mornings. He ran in the evenings. He ran to his chores on his family’s ranch. As a teenager, Beardall was warned about the damage he would do to his feet. “The doctor told me, ‘If you don’t stop running, you’ll be a cripple for the rest of your life,’” Beardall told The Deseret News a decade ago. “That was 1955.” For decades, he ran 20 miles a day, six days a week, recording his distances in handwritten logs. He rested on Sundays, a nod to his faith in the Church of Jesus Christ of Latter-day Saints — unless there was a race, which there often was. He ran thousands of races, mostly in California, from community 5Ks to 100-mile endurance events, often in a pair of worn Nikes. People who unofficially track such things, like the former Runner’s World executive editor Amby Burfoot, believe that Beardall might have run more miles — somewhere around 300,000 — than anyone in recorded history. He ran his first marathon in 1959. That year, the Army diagnosed Beardall with an enlarged heart and told his parents that his heart might burst. His heart did not burst. He kept running. He was a strong and competitive runner in his prime, but not an elite one. He ran at Santa Rosa Junior College and later at Brigham Young University. He competed in the marathon at the Olympic trials four times, with three Top 10 finishes (but no Olympic Games). He ran roughly 200 marathons, mostly close to home, some of them annually for dozens of years. He won a few. Beardall ran before it was cool. He wore old running singlets and bought shoes on clearance. 
He bridged the age between recreational running as a curiosity and running as a cultural movement. (The first New York City Marathon was in 1970, and Nike released its first running shoe in 1972.) He stuck mostly to local, familiar races. He ran forever but rarely ventured far because of a lack of time or money. Beardall was a postal carrier before a three-decade career with the Northwestern Pacific Railroad, mostly as a telegrapher at stations in Petaluma and Healdsburg in California’s wine country. During lunch breaks and after work, he would run along the shoulders of roads or traipse through the surrounding hills. In Northern California running circles, he was known as a sturdy, smiling, balding man in perpetual motion. He was best known for his association with the Dipsea, the rugged and beloved trail race in which the best runners start at the back of the pack. He finished ninth in his first attempt, as a high school senior. He would go on to compete in it 59 times, winning twice in the 1970s. He met his future wife, Lynne Tanner, on a blind date at the Dipsea finish line. They were married for more than 40 years and had five children. As Beardall’s children grew, they joined him on training runs or worked on his race-day crew. But they never quite understood their father’s compulsion to run. To where? From what? Beardall just ran, the way a monk whispers vespers. He never explained it. “I just take it one day at a time,” he told The Deseret News in 2013. “I just keep on chugging.” By then, Burfoot was tracking what he called “an esoteric but interesting” statistic: lifetime miles. “All the people who have logged incredible mileage just represent the metaphor of running, which is endurance, consistency, discipline,” he says. In 2012, Burfoot placed Beardall atop the list, based on Beardall’s estimate that he had run more than 300,000 miles. Today the unofficial recordkeeper is Steve DeBoer, who believes that about a dozen people have reached 200,000 miles. 
On DeBoer’s list, Beardall ended with 294,738 lifetime running miles. DeBoer docked Beardall 10 percent because some early logs went missing, but he suspects that Beardall topped 300,000. “It’s far and away higher than anybody else that we’re aware of,” DeBoer says. For 65 years, Beardall averaged about 4,500 miles per year — about 12 miles a day, on average. In 2013, the year Beardall turned 77, he ran more than 3,000 miles. Three years later, he broke his hip, but that didn’t deter him. This past July, Beardall made his 50th appearance at the Deseret News Marathon, in his native Utah, an event that he won in 1972 and 1973. This time, instead of the marathon, he completed the 10K with a walker.

John Branch has been a Times reporter since 2005, writing mostly about the fringes of sports. His most recent book is a collection of his work, called “Sidecountry.”

Tina Turner B. 1939

Her famous shape was sculpted for public consumption, but she ultimately reclaimed it for herself.

Tina Turner was a human being. Flesh, and blood. This can be hard to remember amid all the iconography: the Avedon and Warhol photos, the music videos, the Acid Queen, the mesmeric Aunty Entity, the biopic, the memes. Turner’s body was on display for half a century and subject to infinite misconceptions, some of which even she bought into. “For far too long,” she wrote in March 2023, “I believed that my body was an untouchable and indestructible bastion.” It’s no wonder. Her body was an optical illusion. The fashion designer Bob Mackie said Turner had the “best body, with the longest legs, and she used it all.” A month before she died, Turner said: “I think I’m as famous for my legs as much as my voice. I only had my legs on show so much as it made it much easier to dance. Then it became part of my style. When I was younger, I never felt confident about any part of my body.” The “long” legs she called her “workhorses” were a mirage. Turner was 5-foot-4. 
Turner’s legs were larger than life because in her Hall of Fame heydays (she was inducted twice), men were a majority of music executives, critics and her fellow rockers. They misarticulated the dimensions of Turner’s body and soul from media platforms that told her her music was too Black to be pop and too white to be R.&B. Libidinous stereotypes leaped off the page, particularly in the 1970s. Rolling Stone described Turner as “snake-snapping.” Both Look and Playboy went with “lioness in heat.” The French magazine Maxipop used “Tina Turner Super Sex!” as its sole cover line. The rote tropes wrote themselves and functioned as carnival calls to come one, come all. Turner’s forerunners include the original rock star, Sister Rosetta Tharpe. But the clearest line is from Josephine Baker to Turner. Turner’s precursor performed her danse sauvage in Paris for years in a banana-adorned skirt. In 1975, Turner donned a thigh-baring zebra-print Mackie concoction that included a tail. The message: This is how you see my body, so I’ll flip your gaze right back at you. In 2000, it was widely reported that Turner insured her breasts and face for $790,000 each. Turner’s voice was insured for $3.2 million, and her toned and glowing legs, the ones on which she danced in high heels for more than 50 years, were covered for $3 million. While these valuations placed Turner in the company of Marlene Dietrich and the famously leggy Betty Grable, any time a price is attached to the limbs of Black people, antebellum echoes are loud. She was, after all, born Anna Mae Bullock in the basement of a segregated hospital in the sharecropping community of Nutbush, Tenn. The kind of place where, before Turner turned 1, a man named Elbert Williams was abducted and shot to death, apparently for registering Black people to vote. Along with the ruby birthmark on her right arm, a perceived stain upon Anna Mae was a rumor that perhaps she didn’t belong to her father at all. 
Richard was an overseer, so the family also worked an acre of their own crowded with cabbage and yams. She trudged through mud, picking sticky strawberries under her father’s watch. With muscles built on catfish and perch, she picked cotton, too, from its thorny bolls. Other token perks of Richard’s role: “nice furniture” and clean sheets on Anna Mae’s skin, always. All this early grit and grace emerge in the unique rasp of Turner’s vocals. As the British rocker Dame Skin said: “She had wonderful phrasing. The way she would drop the note and then find the point of putting the gravel in there. It’s quite a thing to have that gravel in your voice for a long time, and not destroy your vocal cords.” Richard didn’t like Anna Mae, and her mother, Zelma, didn’t want her. Desperation and necessity were the parents of her invention. “To tell the truth,” Turner said in her 1986 autobiography, “I haven’t received a real love almost ever in my life.” She tore out the tight plaits of Black girlhood and pulled her hair into rugged ponytails. “I was always up to something,” she wrote. “Running, moving.” In high school, Anna Mae ran up and down basketball courts as a forward, ran around homes as a part-time maid and by 17 she was in St. Louis running around its vibrant nightclub scene. There, she met Ike Turner. Upon realizing her singular vocals, charisma and spasms of ambition, Ike renamed Anna Mae “Tina,” inspired by “Sheena, Queen of the Jungle,” a Tarzan-esque comic-book heroine. The duo married in Tijuana in 1962, on the day she turned 23. Back in the States, where they were gigging, writing and recording, Turner’s eyes were described as “flashing,” yet she often performed behind sunglasses. From within whirls of fringe and sequin, Turner looked to be dancing on hot coals. She sang ferociously, as if for her freedom. In 1976, Turner left Ike and his cocaine and his sherry and his guns. Finally escaped how he beat her — with fists, with phones, with shoes, with hangers. 
She couchsurfed and earned her keep by housecleaning. Already a Grammy winner, Turner performed at trade shows in ragged costumes and stomped stages on sore feet. There were no more broken jaws, though, or hematomas on her eyes. Only a fixation on the kind of life she knew could be hers. Turner became a solo pop star at 44. From there she walked tall. After a series of spectacular world tours that broke attendance records, Turner announced that she would retire. “I’ve done enough,” she said in 2000 to an adoring crowd at Zurich’s Letzigrund Stadium. “I really should hang up my dancing shoes.” Turner finally truly retired in 2009, after her 50th-anniversary tour. In her 70s, she suffered a stroke, cancer and renal failure, and received a kidney transplant. The donor was Erwin Bach, her new husband. Bach was a German record executive 16 years her junior. The couple met for the first time at an airport in Cologne. “I instantly felt an emotional connection,” Turner wrote. “I could have ignored what I felt — I could have listened to the ghost voices in my head telling me that I didn’t look good that day, or that I shouldn’t be thinking about romance because it never ends well. Instead, I listened to my heart.” They were together for 27 years before marrying in 2013. It turns out that what love had to do with it was loving Anna Mae enough to become Tina. And loving Tina enough to show the world, and the man with whom she shared her “one true marriage,” all that might have withered to nothingness. “It’s physical/only logical,” she sang in one of her biggest hits; she might have been alluding to the grueling work of giving us her all. Turner’s body is the only part of her that is gone. Living to see eight decades, the last three in a mansion on the shore of Lake Zurich, with the birthmarks of America in the rearview, has got to bring satisfaction. 
It’s comforting to imagine her in an afterlife, legs their actual size, trim ankles crossed on an ottoman, eating strawberries, relishing sweetness. I think of her resting in the peace we often wish upon people but can rarely ever conjure.

Danyel Smith is the Los Angeles-based author of “Shine Bright: A Very Personal History of Black Women in Pop,” chosen by Pitchfork as the best music book of 2022. Smith is also host of “Black Girl Songbook,” a podcast focused on the stories of Black women in music.

Cormac McCarthy B. 1933

He wrote in obscurity for decades before the public caught up to him.

The cover letter was addressed to “Fiction Editor, Random House, Inc,” and sent from south of Knoxville, Tenn., in May 1962. “Gentlemen:” it began, “The enclosed manuscript is a novel of some 75,000 words. . . . I finished the final draft today and am leaving for Chicago day after tomorrow. Therefore I will not have time to re-read these pages and they come to you uncorrected. I beg your indulgence in the matter of typographical errors and hope they are few and that they will not interfere with the reading of the manuscript.” Return postage, the letter’s writer — 28-year-old Cormac McCarthy — concluded, was enclosed. No such return would be forthcoming, and no gentleman would be its first reader. A young assistant at Random House, Maxine Groffsky, thought highly of it, and sent it along with a note (“This might be good”) to the 25-year-old editor she worked under, Larry Bensky. In a feat of weirdness that no striver today should expect, Bensky read the manuscript straight away and wrote to his superior, Albert Erskine. “A strange and, I think, beautiful novel in the Southern tradition,” Bensky said, “which has confused me quite a bit on a quick first reading, but which I think is worth publishing.” Bensky assessed the book’s virtues, noting a neo-Faulknerianism that skirted imitation, extending that vein of Southern writing. 
“Some judicious editing,” he said, “could make this into a fine novel.” Although Erskine would become McCarthy’s dedicated champion for the next quarter century — Erskine even sent along fresh typewriter ribbons when McCarthy’s letters became all but unreadably faint — it was Bensky who went back and forth with McCarthy for a year, shepherding him through rounds of revisions before Random House bought the book, for $1,500. Another year of revisions followed, none of which would have happened without Groffsky — who quit a month after she discovered McCarthy — offering before she went the first true estimation of McCarthy’s talent. It would take only 30 years for the wider world to catch up. Since McCarthy died, on June 13, obituaries and encomia have sketched his career’s mean progress: how Random House didn’t make money on McCarthy’s first five novels, continuing to publish him because Erskine, as executive editor, insisted on it; how he had little to do with the literary world; how he lived an itinerant existence, while keeping 7,000 books in storage; how he didn’t teach, or write journalism, to support himself; how he spent those early years in near poverty, eventually getting a MacArthur fellowship in 1981. No one says McCarthy’s prose wasn’t remarkable. But it was often, by virtue of how far he was willing to go, easy to mock. He overwrote like no one else, while managing at the same time to write with such clarity of vision that he could add to the stock of available reality. The things McCarthy looked hard at looked hard at you. The lore around McCarthy metastasized when Knopf decided to heavily promote novel six, “All the Pretty Horses,” which brought him what we call “success.” Along with an excerpt, Esquire published a list of rumors about him: that he had been a ditchdigger, a truck driver, that he never spoke, etc. His author photos by Marion Ettlinger made him look ruggedly posthumous, cast in stone. 
In a profile in The New York Times Magazine of the era, McCarthy talks about venomous snakes and grizzly bears, as though he had briefly descended from horseback before riding off into the evening redness. But as with most great writers, the essential truth was plainer: He was a nerd for the art. In 2001, I received a letter that led me to this obvious-after-the-fact understanding. My friend, the writer Guy Davenport, included some pictures of McCarthy and him from the ’70s. McCarthy was in maroon flares and a striped tank (he’s jacked, for a writer), feathered hair and period-correct movie star sunglasses. For 20 years the two men corresponded, the very period of McCarthy’s obscurity. They talked about art and thought, from Heraclitus to Balthus, Walter Savage Landor to Ernst Mach, Ruskin to Wittgenstein. They discussed obscure books like Doughty’s “Travels in Arabia Deserta” and Eckermann’s “Conversations With Goethe.” By the late ’70s, McCarthy’s first three novels were out of print, a situation he was trying to remedy. (“An outfit called Ecco Press claims they’d like to issue my stuff in paperback. Do you know anything about them?”) In 1978, he told Davenport that he was revising “the western book;” in 1981, “still at work,” publication probably “in six or eight months.” When, four years later, that western, “Blood Meridian” — a straight-up masterpiece — did come out, it proved commercially D.O.A., selling just north of 1,000 copies. By ’87, he was one draft into “All the Pretty Horses.” Finishing took another five years. How did he hold on? “I have a peculiar trust,” he told Davenport at one point, “in the rightness of the actual.”

Wyatt Mason is a contributing writer for the magazine and teaches at Bard College. He last wrote about the novelist Sigrid Nunez.

Jerry Springer B. 1944

He left politics to make great advances in trash television — only to see the two become indistinguishable.
In 1998, the year that “The Jerry Springer Show” beat Oprah Winfrey in the ratings, Jerry Springer sat down and tried to imagine his own death. He assumed it would happen during a taping of his show, perhaps the one about the man who cut off his own penis. Springer’s body would go tumbling through the live studio audience that was always chanting “Jer-ry! Jer-ry!” A stripper would attempt to revive him, unsuccessfully. Springer would then appear before God, who would decide if he belonged in heaven or hell. And what would Springer, a German Jewish immigrant, say to this Christian God in defense of himself? “I never planned to become the titan of trash,” Springer wrote, describing this fever dream in his autobiography, “Ringmaster!” After all, Gerald Norman Springer was born in an underground bomb shelter in London during the Second World War, and arrived in New York by boat at age 5. In Kew Gardens, Queens, while his father sold stuffed animals on the street, Gerald fell in love with baseball and American politics. As an undergraduate at Tulane University in New Orleans, he rode the bus to Mississippi to register Black residents to vote, and later took a break from law school to work on Robert F. Kennedy’s presidential campaign. By 25, as a young attorney in Cincinnati, Springer ran for Congress as an antiwar candidate. “He sounded like Bobby Kennedy, and kind of looked like him too,” Jene Galvin, a close friend who met Springer around this time, told me. Springer lost, but was soon serving as a member of the city’s council, and then mayor. He opened health clinics in low-income neighborhoods and negotiated the end of a transit strike. In other words, he was a decent guy. Sure, he enjoyed the spotlight of local politics, perhaps a little too much. But was this how it was all going to end — talking to a man who tried to flush his manhood down the toilet? He knew where the road split, of course. 
After he had a failed run for governor in 1982, NBC’s Ohio affiliate offered him the anchor chair. It sounded too fun to resist. Springer still got to talk about the issues, and ended up winning 10 regional Emmys in 10 years, including one for a special report that had him living on the streets as a homeless man for a week. “The Jerry Springer Show,” when it premiered in 1991, wasn’t supposed to be what it ultimately became. He was meant to replace Phil Donahue. Springer started out with shows about the Waco siege and the Iran-contra scandal. But the ratings weren’t there. That’s when Richard Dominick, a veteran of supermarket tabloids that reported on aliens and toaster ovens possessed by the devil, was brought on as executive producer for a revamp. Springer didn’t fight it. He played along. He interviewed porn stars who set world records, child alcoholics, conjoined twins, people who self-amputated their limbs and married farm animals and their own cousins. Audiences were riveted. It turned out they also loved a good brawl. In 1994, Steve Wilkos, a Chicago policeman, had just finished a patrol shift when his buddy Mike told him about a side gig. The next day, Wilkos was playing referee during an episode of “Springer” that featured members of the Ku Klux Klan. Wilkos would eventually become the show’s head of security, incurring more than a few battle wounds during his tenure: bites, kicks, a concussion from a chair thrown at his head, a sprained groin and multiple back injuries that resulted in two surgeries. By that point, the television audience had ballooned to eight million people. Catholic priests staged protests, and Chicago’s City Council tried to get the show taken off the air. Springer tirelessly defended it, railing against what he saw as elitism. But privately he was more forthcoming. “The show became stupid stuff,” Galvin told me, “and Jerry always described it as stupid stuff. He wasn’t ashamed of it, made no excuses for it. 
But he knew what it was and didn’t think it was something it wasn’t.” Friends say Springer stayed with the show for his family. Many of his relatives back in Germany had been killed in the Holocaust. There was a story Springer liked to tell about how his father kept a Chevrolet in the garage of his Queens apartment building long after he could no longer drive because he wanted to make sure he had a getaway plan should he need it. Springer was married by now, and he had a daughter with disabilities. Financial security had become important. “It was a job for him,” said Wilkos, who became a friend. “But I think there was always an underlying desire in Jerry to get away from the show. The number one thing in his life was politics.” Springer tried to find his way back. In 2000 and 2004, he explored running for a Senate seat, spending $1 million and seven months on the latter campaign. He owned a private jet and crisscrossed the state of Ohio giving speeches and gauging support in areas many politicians didn’t travel, tapping into the same audience that loved his show. “He could go into what we today call Trump country and get the same people that Trump could get,” said Galvin, who worked on both campaigns. At one point, it got so real that Wilkos, afraid he would soon be out of a job, got his real estate license. But each time the filing deadline approached, Springer wouldn’t get in the race. “He’d run up to the water, like on a beach, and then run back,” Galvin said. Springer decided that a political career wasn’t possible so long as his show was on the air. He feared the public wouldn’t be able to separate his political message from the spectacle he conducted on TV. So he stayed in his lane. 
He did “Dancing With the Stars” and hosted “America’s Got Talent.” In his autobiography, guessing what his obituary might say, he wrote, “Springer’s death comes none too soon for those who saw him as the godfather of Western civilization’s demise.” In reality, his critics were kinder. They credited him with showing us the true might of the American populace and anticipating reality TV. You need look no further than Bravo’s popular “Real Housewives” franchise to see the legacy of his show — in Teresa Giudice flipping tables and chairs; in producers stepping in to break up catfights; in Mary, the churchgoing Salt Lake City housewife, who married her step-grandfather. Springer hosted his show until 2018, long enough for him to see a reality star win the presidency, despite the daytime-TV tawdriness that surrounded the campaign. Springer was a fierce critic of President Donald Trump — “Hillary Clinton belongs in the White House. Donald Trump belongs on my show,” he tweeted — but he also saw it as an opening. Did he give up too soon? Was someone like Springer electable now? In 2017, not long before his show went off the air, Springer explored his final bid for office. He set his sights on the governorship of Ohio, the very race he lost in 1982 that resulted in his TV detour. He hosted fund-raisers, met with Democratic power brokers and joined union workers in a Labor Day march in support of a $15-per-hour minimum wage. “I could be Trump without the racism,” he told The Cincinnati Enquirer. But in the end, he backed away again. He was too old now, and he had to think of his family. The moment, as he saw it, had passed.

Irina Aleksander is a contributing writer for the magazine. Her last article was about Oscar campaigns and the strategists who run them.

Harry Belafonte B. 1927

He understood the power of celebrity to help force change for Black Americans.
In the middle of a school day in 1943, Harry Belafonte walked into a Harlem movie theater and sat for a showing of the Humphrey Bogart World War II film “Sahara.” He struggled with undiagnosed dyslexia and was blind in one eye, difficulties that contributed to him skipping lessons and, eventually, dropping out of high school. The 16-year-old Belafonte was transfixed by Rex Ingram, a Black actor who played a Sudanese soldier. One scene — the camera zoomed in on Ingram’s face as he suffocated a Nazi soldier — led the boy to enlist in the United States Navy. World War II forced Black servicemen into the most cruel American Catch-22: Risk death in Europe only to return home to a meager second-class citizenship reinforced through racial violence. Armed service turned some Black soldiers into intellectuals, though. Older servicemen passed young Belafonte political pamphlets. He had already had something of a political education back home, where his Jamaican-immigrant mother introduced him to the activist Marcus Garvey. Brothers helped him build on that by introducing him to W.E.B. Du Bois, whose 1940 autobiography, “Dusk of Dawn,” was given to him by a colleague. Belafonte learned how to find more books by the scholars in Du Bois’s footnotes at the Chicago public library, where all he needed was his dog tags to work his way through new thinkers. After 18 months of service in the Navy, Belafonte returned home to Harlem. He eventually got work as a janitor in a few apartment buildings that his stepfather worked in, and a tenant gifted him two tickets to see the play “Home Is the Hunter” at the nearby historic American Negro Theater. Entranced by the community and collaborative spirit of the show’s production, he decided to volunteer as a stagehand for the Theater, where he met his fellow actor and friend Sidney Poitier. He’d soon go from stagehand to drama student at the New School to starring in Off Broadway productions. 
After a manager and nightclub owner named Monte Kay saw him singing in a production of “Of Mice and Men” (in a musical role that had been written to accommodate Belafonte’s talent), Belafonte secured a slot at the Royal Roost. On his first night singing there, he shared the stage with the jazz great Charlie Parker. That propelled him to a singing career in local clubs, where he could make as much as $350 a week. Eventually he began performing in the South, where the indignities of touring and lodging in segregated spaces set him on an activist path. Between 1946 and 1960, Belafonte established himself as the first Black megastar. In 1954, he won a Tony Award for his performance in the Broadway musical “John Murray Anderson’s Almanac.” In the same year, he starred alongside Dorothy Dandridge in the movie musical “Carmen Jones.” Belafonte’s third album, “Calypso,” released in 1956, sold more than one million copies. He eventually became one of the highest-paid Black performers in American history. Belafonte’s star power and political awareness came together in his civil rights activism. After personally inviting Belafonte to hear a sermon of his at Harlem’s Abyssinian Baptist Church in 1956, the Rev. Dr. Martin Luther King Jr. requested private time with him. In a conversation that Belafonte described as lasting three hours but feeling like 20 minutes, he realized that he was in the presence of greatness. For the next dozen years, Belafonte made King’s mission his own. In August 1964, James Forman, a young civil rights activist and leader at the Student Nonviolent Coordinating Committee, reached out to Belafonte during the Freedom Summer. There was a problem: At the start of a large voter-registration drive aimed at registering Black Americans in Mississippi, Andrew Goodman, James Chaney and Michael Schwerner disappeared. 
Forman informed Belafonte that he had a crisis on his hands: If the other volunteers ran scared, the Ku Klux Klan would be encouraged to believe that murder could extend segregation’s life. He needed money to house and feed the activists risking their lives for the franchise. Belafonte and Poitier boarded a small Cessna headed for Greenwood, Miss., carrying a black medical bag stuffed with $70,000 in small bills that he had raised. Belafonte joked that while the Klan might murder one famous Negro, they’d be unlikely to murder two, particularly the two most famous Black actors in the world. After their plane skidded onto the runway, they jumped into a beat-up jalopy and drove off into the night, with the Klan trailing them in pickups. And like a righteous “Stagolee,” once they were safe, Belafonte entered a barn filled with volunteers and sang his hit “The Banana Boat Song,” with its famous refrain — “Day-o, day-o” — replaced by “Freeeedom, Freeeeedoooom,” before opening the black bag and dumping tens of thousands of dollars onto a table. Somehow, Belafonte managed to juggle such radical activism with broad mainstream appeal throughout his career. He understood that show business could be as good a tool in the fight against segregation as money. In February 1968, he took over for Johnny Carson on “The Tonight Show” for one week. At the time, televisions all across America would be tuned into Johnny Carson five nights a week. Belafonte seized upon that ubiquity, turning the broadcast into the most integrated show in America, a front in the fight for Black rights. Belafonte the artist and autodidact, who could riff extemporaneously on the work of contemporaries like the filmmaker Gillo Pontecorvo (“The Battle of Algiers”) and the writer Gabriel García Márquez, put his skills as an interviewer on full display. 
He called his week on the show a “sit-in”; leaning on his extensive Rolodex, he brought some impressive guests to help him, including Aretha Franklin, Wilt Chamberlain, Robert F. Kennedy and Dr. King. Belafonte didn’t just invite them on as guests — they were friends — and in talking to them on air, he was beaming Black camaraderie and politics right into people’s homes. He foregrounded that sense of intimacy by airing something special: footage of himself and his family on vacation. And for a moment, the face of a beautiful and smiling Black man was the last image America saw before it went to sleep.

Reginald Dwayne Betts is the founding chief executive of Freedom Reads and a contributing writer for the magazine. He last wrote about Elijah Gomez, who was shot while walking home from school.

Jim Brown & Raquel Welch B. 1936 and 1940

They were typecast as sex symbols, but when they appeared together in a movie, that sexiness became radical.

Boy, the movies can be cruel. Even if your timing is perfect and you’ve got a swear-to-God starriness about you, once somebody puts you on the poster for something called “One Million Years B.C.” looking 50 feet tall and dressed in wild-woman rags — rags you’ve successfully managed to fill out — there’s just no going back. You’re a bombshell now, an offer you can’t defuse. This was 1966. Raquel Welch was 26 and never seemed to stop working, in junk mostly even though nothing about her footed that particular bill. The daughter of a Bolivian aeronautical engineer, she had come to Hollywood after doing weather forecasts in San Diego, her hometown. (Tejada was her maiden name.) That kitschy B movie (kitschy hit B movie) helped make an eternal item of her. For nearly five decades, Welch was assigned a “sex symbol” lane, and she filled that out too. When Playboy published its “100 Sexiest Stars of the 20th Century,” she was third, equidistant between Marilyn Monroe and Cindy Crawford. 
That was 1998. By then, Welch had already made her peace. She had put out the “Raquel: The Raquel Welch Total Beauty and Fitness Program” video at 44, her hair short and “wet look” glamorous, her bathing suits busy, her attitude serene. (“Let’s get in shape,” she coos.) She had been on Broadway a couple of times, twice replacing Lauren Bacall in “Woman of the Year,” a proto-feminist romantic comedy role that Katharine Hepburn made classic, and later taking over for Julie Andrews in the musical gender farce “Victor/Victoria.” Ideal vehicles for the continental sharpness of her West Coast diction and comic timing whose speed nobody ever bothered to clock; occasions, too, to consider lanes not completely filled. In this, she had a soul mate. Back in the old exploitation-film days, very occasionally, a producer would have the good idea to match what Welch had with another “use what you’ve got” star. In “100 Rifles,” it was Jim Brown. This was 1969, and a dud of a western became about the time two pinups — her, reluctant; him, ready — found themselves pinned to each other. Brown had already quit his running-back job more or less from the set of “The Dirty Dozen,” one of the biggest movies of 1967. He was 30 and still in the prime of his football career, maybe the best career of just about anybody to enter the N.F.L. But he didn’t like it when the Browns’ owner, Art Modell, threatened to fine him for missing training camp. He didn’t like the way the rest of front-office football treated him, either. He was more than a body that the Browns owned and that the coach Paul Brown controlled. “Paul and the other coaches didn’t like this about my attitude, didn’t like that,” Brown wrote in his 1989 memoir, “Out of Bounds.” “But they loved the damn performance.” He went on: “If my mind was limited to football, per the desires of the Cleveland Browns staff … what type of man would that make me? My attitude was shaped by personal conviction. 
It was shaped by society and my role in that society. It was shaped by being Black in America in the 1950s. And if it wasn’t for that ‘attitude’ that people spoke of, I never would have made it to the Cleveland Browns.” Still, he really did dig performing. And even though he couldn’t deliver a line as smoothly or as confidently as he could sail to the end zone, Brown definitely had something. There was a notion back then that he could be the new John Wayne, but he didn’t carry many westerns. Instead, he led a vanguard of virility during what got called the blaxploitation era, in which the movies were contemporary and most of the heroes thrived outside the system. Brown was cool, passive yet never a complete blank, the rare beefcake whose stolidity has soul. It shouldn’t have been radical, putting this sexy, polemical Negro in a movie with a white woman, as Welch was perceived as simply being. The radical part wasn’t just their being in the same movie. They were in each other’s arms. They were in the same bed. For the last 30 minutes of “100 Rifles,” they’re supposed to be a couple. A blurb of the plot would go something like this: Arizona sheriff chases part-Native American bandit to Mexico, gets mixed up in revolution. Burt Reynolds is the bandit. The revolutionaries’ leader is Welch. Brown plays the sheriff. And after a triumphant sacking of the Mexican general’s ample headquarters, the swaggering sheriff finds himself alone with the comely revolutionary. It’s about 70 minutes in, and Brown’s shirtless for the first time. (A blaxploitation movie would never make you wait that long.) Welch has just bandaged his gash. For his solidarity, she thanks him with a kiss. And a peephole is mistaken for a door: He grabs her; she puts up violent resistance. “No,” she begs, “not like this.” He reconsiders and releases her. She’s attracted to him, but what she won’t be is manhandled. He gathers as much and proceeds to gently unlace her top. She turns around. 
Their lips meet before they tumble onto a bed, whereupon Welch holds her mouth in an ecstatic “o.” From all the way over here in 2023, this encounter probably looks like what it is: a timidly shot, badly lit, vaguely committed love scene. But in 1969, just two years after Sidney Poitier caused a national sensation as an angelic doctor engaged to a white girl, you were still likelier to see a Black man unlace some white man’s shoe. Brown writhing on top of Welch? Welch cooking him dinner and telling him she’s his? Huge deal. The movie knows this, because after a climactic showdown with the military, Welch’s character turns up dead — nominally martyred for her people but, really, murdered for the American sin of bonking a Negro (I suppose for patching him up, loving and feeding him too). But the climate was changing. Audiences hungered to see erotic taboos smashed. Black audiences hungered to see Black people smashing. The more absurd, the better. (That very spring, Dionne Warwick made plantation love to Ireland’s Stephen Boyd — in “Slaves.”) The “100 Rifles” shoot itself was fraught between Welch and Brown. Burt Reynolds claimed he was their referee. Brown didn’t dispute that a ref was necessary. “The thing I wanted to avoid most was any suggestion that I was coming on to her,” he said at the time. “So I withdrew.” Before the film’s release, Welch concurred that things were tense. Maybe it was because Tom Gries, the director, wanted to shoot that sex scene on the first day of production. “There was no time for icing — and it made it difficult for me,” she told Army Archerd in Variety. She remembered being “feisty”; Brown, she claimed, was “very forceful.” Welch died on Feb. 15, two days before Brown turned 87. By the middle of May, he was gone, too. “100 Rifles” is the only time her lane merged with his. In theaters, the movie was a howler. But in my living room, it had become newly poignant. 
These two symbols of sex, two movie stars who had what, for a screen art, constitutes true talent: They were hypnotically, outrageously watchable. The angles of her face, the warmth in his eyes, her curves, his hands — flavor for the eye. Once, there they were, making a tender, visceral connection, two pinups, pinning us to them.

Wesley Morris is a critic at large for The Times and a staff writer for the magazine. He is the only person to have won the Pulitzer Prize for criticism twice.

Marianne Mantell B. 1929

No one would listen to her, but she intuited the power of audiobooks.

It was 1952, and Marianne Mantell (then Marianne Roney) was at lunch in Manhattan complaining about work. She wrote obscure liner notes for record labels where her bosses ignored most of her suggestions. She was 22, ideas bubbling out of her, but she was stuck. Her lunchmate, Barbara Cohen, was languishing in an unfulfilling job of her own in book publishing. Marianne and Barbara had met in an ancient-Greek class at Hunter College, where they bonded over reading Aeschylus as the poet wrote it. Their Greek and Sanskrit hadn’t helped them much yet in the Manhattan job market. Their lunch conversation turned to poetry, as it often did, and Cohen mentioned that Dylan Thomas, the Welsh poet who had recently published “Do Not Go Gentle Into That Good Night,” was reading that very evening at the 92nd Street Y. “Let’s go,” Cohen said. “Let’s record him,” Mantell replied. Mantell had an intuition that the poet’s voice would be worth preserving, and that huge numbers of people would want to hear poets reading their poetry as much as she did. Her record-label bosses had disagreed when she pitched the idea, but here was a chance to try without them. Mantell and Cohen raced uptown to the reading hoping they would somehow get a chance to buttonhole Thomas. He was a sensation, the closest thing the poetry world had to a rock star. 
He had spent the past few years careening through literary society — drunken soliloquies, punches thrown at parties — and crowds showed up to witness the havoc as much as the poetry. Thomas’s performance that night stopped the two women cold. “We had no idea of the power and beauty of this voice,” Cohen said years later. “We just expected a poet with a poet’s voice, but this was a full orchestral voice.” They passed along a note explaining their idea in hopes that Thomas would get in touch. “We just signed our initials,” Mantell said, “so he wouldn’t know we were unbusinesslike females.” In retrospect, that might have been a tactical error. “Little did we know he would have been extremely interested if he had known that we were young and unmarried,” Cohen remembered. They persisted, calling his room at the Chelsea Hotel over and over. No luck. Finally, several days later, Cohen set her alarm for 5 a.m. to try to match Thomas’s hours and — huzzah! — he answered the phone, still wobbly from a big night out. The famous poet agreed to meet them in daylight at the Little Shrimp, a restaurant attached to the hotel. The poet’s wife, Caitlin, joined them, apparently suspicious of two 22-year-olds who had been so eager to meet her husband. Caitlin’s temper was the stuff of legend: At one party where Thomas was flirting with another woman, Caitlin came up behind her and, without saying a word, stubbed her cigarette out on the woman’s hand. Decades later, Cohen remembered the odd encounter at the Little Shrimp: “His wife said, ‘I just washed his hair — isn’t it beautiful?’ And it was! It was gleaming gold.” They won Thomas over, and made plans to meet at a recording studio. Thomas didn’t show up. They scheduled another session at Steinway Hall on 57th Street, and this time Thomas arrived with a sheaf of poems under his arm. But there was still a problem — a recording of the five poems wouldn’t be long enough to fill both sides of a record. 
Thomas mentioned that he had recently written a story for Harper’s Bazaar, a riff on Christmas memories. They scrambled to find a copy of the magazine, and for the B-side of the album, they recorded Thomas reading “A Child’s Christmas in Wales.” Now they had a record, but they needed a record label. On the subway, they ticked through English literature in search of a name. They settled on Caedmon — named for a seventh-century cowherd poet. They were novices in publishing, recording and business affairs, but they scratched together some $1,500. Thomas agreed to a $500 advance, plus royalties. When they released the Dylan Thomas record later that year, it became their first hit, going on to sell some 400,000 copies through the 1950s. Cohen and Mantell would turn Caedmon into the leading publisher of spoken-word audio, featuring an Olympian roster of authors reading their work aloud: T.S. Eliot, Zora Neale Hurston, William Faulkner, Sylvia Plath, James Joyce. The desire to bottle up the poet’s voice wasn’t a new idea so much as a very, very old one. Mantell and Cohen were reaching across time to the prehistoric hunters who told stories around flickering fires; to Homer, who sang of kings and monsters; to generations of parents who whispered fables to their children in the dark. Caedmon, which is now an imprint of HarperCollins, would go on to earn dozens of Grammy nominations. In 2008, the Library of Congress inducted the Dylan Thomas album into the National Recording Registry, saying “it has been credited with launching the audiobook industry in the United States.” In 2023, an estimated 140 million people listened to an audiobook. “A poem,” Mantell said, “is meant to be heard.”

Sam Dolnick is a deputy managing editor for The Times. He last wrote about Darius Dugas II, who was killed by a stray bullet in 2022.

Dr. Susan Love B. 1948

She was dismissed by her male colleagues, but she knew there was a better way to treat breast cancer.
Susan Love was a woman with a calling. In 1968, at 20, she entered a Roman Catholic convent, but the demands of a postulant proved too restrictive. She left within months to follow her true path: becoming a doctor. She would need that sense of destiny, every shred of determination, to get there. When informed of her plans, as she recalled in a 2013 documentary, her undergraduate adviser at Fordham said applying to medical school would be tantamount to murder, since the boy she would displace would be forced to fight in Vietnam. Love went ahead anyway, attending Downstate College of Medicine at SUNY, where a generous 13 percent of students were female; at the time, the quota was often 5 percent. Women were concentrated, by choice and by chauvinism, in pediatrics or OB/GYN — still two of the most common specialties — but Love pursued general surgery, drawn to its challenge and variety (according to her wife, Helen Cooksey, she also flirted with cardiology, finding irresistible the idea of sending heart patients to Dr. Love). She was not met with enthusiasm. Interviewing for residencies, she was asked at Columbia what kind of birth control she used; at Brigham she was asked how she expected to operate while pregnant. Once she did land a spot, male colleagues would run a hand down her butt after she scrubbed in for a procedure; anesthesiologists, unable to conceptualize a female doctor, would ask when the surgeon was coming. Despite all of that, she rose to become chief resident at Beth Israel Hospital in Boston but was not offered a single academic job upon completing her training. In private practice, the only cases referred to her were patients with breast problems. It was bottom-of-the-barrel stuff, considered more mechanics than art, and more suitable for a woman. The “one step” approach to breast cancer was still common then: a woman with a lump would be sent into surgery, waking with a bandage on her chest. 
Her only hint at the outcome would be the clock: If an hour had elapsed, she’d had a biopsy. Three hours: Her breast was gone. Those patients needed basic information, and they needed an advocate. Love realized she could provide both. At tumor board meetings where specialists discussed the protocol for individual cancer cases, she fought for breast-conserving lumpectomies with radiation, which Italian studies had shown were as effective as mastectomies for early-stage disease. Other surgeons claimed the data was irrelevant to Americans. She persisted, adding that women deserved a choice, and was again labeled a murderer, accused of placing “vanity” over lives. By 1990, breast-conserving surgery had become, and remains, the standard of care. That same year, Love published her indispensable guide to all things mammary, “Dr. Susan Love’s Breast Book.” It’s hard to overstate its impact. Love’s clear explanations of screening, diagnosis and treatment allowed women to replace their dependence on medical eminence with actual medical evidence. She went on to co-found the National Breast Cancer Coalition, which by 1993 had successfully lobbied to raise federal funding for research more than tenfold, to $300 million. She then helped create a program for women living with cancer to have an impact on how that money was allocated. That activism coincided with the rise of pink ribbons and awareness campaigns, which, Love felt, eventually became victims of their own success. The newly diagnosed were infantilized with gifts of pink teddy bears or turned into triumphant “she-roes.” The potential for screening to save lives was overpromised, especially for young women. Love’s book is particularly helpful in untangling that complicated knot. “The medical profession and the media have sort of colluded to make it sound like if you do your breast self-exam and you get your mammogram, your cancer will be found early and you’ll be cured and life will be groovy,” she once said. 
Mastectomy rates again climbed and more women requested medically unnecessary bilateral amputations for early-stage disease in one breast. And still, more than 42,000 American women die annually of breast cancer. As head of her own foundation, Love was convinced the focus ought to be on preventing the disease altogether — perhaps finding a vaccine like the one that stops HPV-related cervical cancer or investigating whether, as with stomach cancer, a treatable bacteria plays a role. Even in her final days, she was asking: How does breast cancer start? In the introduction to the latest edition of “The Breast Book,” released last month, Love wrote that she believed hers would be the generation that stopped breast cancer. Why not? There was a time when she was told she couldn’t be a surgeon or that she couldn’t marry her partner. Change can be slow but also very quick. “That’s what drives me,” she liked to say. “As a good Catholic girl, this is the mission I was given, this is my role in this life, and I’m going to do it if it kills me.”

Peggy Orenstein is the author, most recently, of “Unraveling: What I Learned About Life While Shearing Sheep, Dyeing Wool, and Making the World’s Ugliest Sweater.” She writes frequently about breast cancer.

Harry Lorayne B. 1926

His prodigious memory grew out of childhood deprivation. And landed him on ‘The Ed Sullivan Show.’

Harry Lorayne grew up Harry Ratzer, in a tenement on the Lower East Side of Manhattan. He often described his parents as “professional poor people,” and his childhood was subsumed in a bleak form of emotional poverty, too. Neither parent ever told him they loved him. He was given all his meals alone. He was never taught to brush his teeth and did not even discover tooth-brushing, as a concept, until he was a teenager. “I could have been a stranger in the house,” he once recalled. He was hugged, as a child, exactly once. 
(When he was 12, his father took ill and reached down to embrace Harry, who was asleep on the couch, before he left for the hospital. His father then jumped out of his 16th-floor hospital room and died.) Instead, Harry was beaten — with seething regularity. Around age 10, finding that he couldn’t keep straight any of the material being taught to him in class, he kept failing his daily quizzes. And each time he failed, his father hit him. Harry became flooded with anxiety, his stomach clenching every morning before school. Eventually, he sought out books on memory training and discovered techniques dating back centuries. Prime among them was the use of “mnemonic pegs,” whereby you encode abstract information into concrete images. Studying for one quiz, for example, Harry found that he could remember the capital of Maryland — Annapolis — by picturing an apple falling on the head of a classmate named Mary. An apple is landing on Mary’s head: An-apple-is. Mary-land. Soon he was acing his quizzes. The turnaround astonished him. He would remember how to remember for the rest of his life. Maybe you remember him. He was a fixture of late-night television, making two dozen appearances on Johnny Carson’s show alone. During one of his first high-profile gigs, in August 1964, he roamed the aisles on “The Ed Sullivan Show,” pointing at audience members one by one. “Mrs. Schiffman, Mrs. Kent, Mrs. Lyons,” he began. “Miss Dempsey right over here. Mrs. Newman, Mr. Michael Newman.” This was his signature showstopper: having met everyone in the studio just before airtime, he was now reciting their names. He called them out authoritatively, like God naming the animals, but also unthinkably fast, like an auctioneer, or an auctioneer attending to last-minute bids while fleeing a fire. And in fact, the stunt on “Ed Sullivan” was twice as hard as it looked. 
Lorayne later revealed that, after successfully reciting the names of several hundred people during a rehearsal earlier that day, he was dismayed to watch that audience exit the studio and a throng of completely different faces, with completely different names, file in for the actual show. He recited sequences of random numbers, random words. He regurgitated chunks of phone books. Merv Griffin once quizzed him on the contents of an issue of Time magazine. (Page 85? A review of a new biography of Agatha Christie, Lorayne said, then relayed the review’s headline, the author and publisher of the biography, the book’s page count and retail price too.) He was also a renowned magician, a master of close-up card tricks; a video produced in 2016 for his 90th birthday featured tributes from giants of modern magic like David Copperfield and Penn & Teller. But Lorayne was always wary of outing himself as a magician to the general public. He worried people would mistake his memory feats for just another trick instead of a thoroughly teachable skill. He wrote more than 20 books on memory training and produced a slew of other educational materials. His celebrity students included Anne Bancroft, Alan Alda and Michael Bloomberg. But Lorayne seemed focused, most of all, on providing an edge to ordinary businesspeople and students. “We have potentially brilliant surgeons out on the streets here who will never become doctors,” he once griped, “because they can’t remember enough to pass the tests.” Forgetting was a barrier; remembering was a key. Now he exists only as memory. He’s remembered as a showman, a salesman, an evangelist — a relentless workaholic who spoke in a quick, slick word cloud of canned anecdotes, wisecracks, sales pitches and boasts. But a passage from his 1961 book, “Secrets of Mind Power,” revealed, fleetingly, a philosophical side as well. “We are all, each and every one of us, completely and irrevocably alone,” Lorayne wrote. 
But he had stumbled on a way to “relieve that loneliness just a bit.” The key was to look outward, with a wide-open mind: “I have never yet met anybody, from any walk of life, from whom I haven’t learned something,” he explained. “This could not happen if I weren’t listening — I mean really listening — to them.” This is how memory works, Lorayne always insisted in his tutorials: You can’t recall anything if you don’t pay attention in the first place. Remembering is a byproduct. Living is the point.

Jon Mooallem has been a contributing writer since 2006 and is the author of three books: “Wild Ones,” “This Is Chance!” and “Serious Face.”

Burt Bacharach B. 1928

Often out of step with his musical times, he composed songs for the ages.

It could sound like an odd refrain — “I never felt like I fit in anywhere” — coming from someone who, viewed perhaps over the rim of a martini glass, could look like the coolest guy in the room. He was suave, handsome, married to a glamorous actress. And he had a seemingly renewable lode of gold records, so many that in the summer of 1970, Newsweek put him on its cover, as if to say, Here is the face of popular music. And yet Burt Bacharach was something of a misfit, because the songs he composed sounded nothing like what was hip at the time, not like the Beatles, not like Motown, not like Dylan, the Stones, the Doors, the Dead. If anything, they were an antidote to all that. Think of them: songs like “What the World Needs Now Is Love,” “I Say a Little Prayer,” “Raindrops Keep Fallin’ on My Head” — all abundantly tuneful, proudly orchestral, mercurially rhythmic and not a single one with a loud electric guitar. Even the edgier songs, like the stately “Walk On By,” were clean and polished. If Tiffany boxes played music, this might have been what emerged. Every true artist probably feels like an outsider at some point, but Bacharach seemed born for the role. 
He grew up a Jewish kid in a mostly Catholic neighborhood in Forest Hills, Queens. He wanted to be an athlete like his father, who lettered in four sports in college and played professional basketball before professional sports was even much of a thing. But his growth spurt came late, and he was always too small. In high school, he bombed out at just about everything else — he ranked 360th out of 372 students in his class. But there was a piano in the house, and it was his mother, an amateur singer, who encouraged him to take lessons. Finally, here was something he could play competitively. When he decided to have a go at songwriting, in his 20s, the world started bending away from him again. He loved jazz, and the classical composers Debussy and Ravel, but a tectonic shift was happening in popular music, to rock ’n’ roll, for which Bacharach had little affinity, especially in its formative years. “A lot of those songs consisted of just three chords, C to F to G,” he wrote in his 2013 memoir, “Anyone Who Had a Heart.” “If they had thrown in a C major seventh, that would have been a lot more interesting.” He’d land a B-side here or an A-side there, but dozens of other songs went unrecorded, unheard, unloved. They were too old-fashioned or too jazzy, tried too hard or not hard enough. Some years later, when he did achieve what seemed a composer’s dream — a song recorded by Frank Sinatra — the rhythm had been changed from a waltz to the squared-off 4/4, which was considered more palatable to the average listener. But to Bacharach it ruined the song, if for no other reason than it wasn’t how he heard it in his head. 
Sinatra even told a mutual friend that Bacharach was “a lousy writer.” Bacharach might have remained a journeyman composer if he hadn’t found, in Hal David, a lyricist who could match the growing maturity of his melodies, and Marie Dionne Warrick (later of course Dionne Warwick), a 20-year-old with a Maserati of a voice, a compelling combination of flash and finesse. Now Bacharach could write whatever he wanted and not worry that it would be changed or that a singer couldn’t deliver it, and the troika connected right off the bat with “Don’t Make Me Over.” Written mostly in the very unusual (for a pop song) 12/8 time, the song had a money line that came to be a feminist entreaty but could have also served as Bacharach’s own: “Accept me for what I am/Accept me for the things that I do.” The hits — by Warwick, Dusty Springfield, Gene Pitney, et al. — started to flow, and Bacharach became known as one of pop’s most stickling perfectionists. In the recording studio, “Burt would always ask for one more take,” Warwick, now 83, told me recently. “Then one would turn into 90,000, and we’d end up using the first one anyway.” Still, Bacharach often found himself composing against the current. When the rock band Love recorded his and David’s “My Little Red Book,” they simplified the harmony (or, as a rock-musician friend of mine said, “They took out the fruity chords”). On Broadway, Bacharach and David’s musical, “Promises, Promises,” had a title song that lit warning flares for singers in practically every measure: intervals that crept or leapt, multiple time-signature changes. Jerry Orbach, who had the lead role, told Bacharach, “You’re breaking my back every night with that song.” Rather than make any changes, Bacharach brought in Warwick to coach Orbach. (Her advice on how to sing it? “Very carefully,” she told me.) Orbach, who was in danger of losing his job, not only got onboard but also won a Tony Award for his performance. 
“Promises, Promises” would be a major influence on the melodious Sondheim masterpiece “Company.” Even Sinatra came around; he asked Bacharach to write an entire album for him. But it never happened. Bacharach was simply too busy.

Rob Hoerburger is the copy chief of the magazine and the author of the novel “Why Do Birds.” He has contributed to The Lives They Lived 18 times.

Michael Protzman B. 1963

He was a QAnon influencer whose followers chased his prophecies across the country.

If there was one predictable thing about the unpredictable life of Michael Protzman, it was that when he died, nobody would believe it. Nobody, at least, among the followers who were drawn into the world that Protzman conjured online over the last two years of his life. It was a world in which the dead were never truly dead, and everyone always seemed to be someone else in a mask. Protzman was a self-appointed interpreter of the Trump-centered conspiracist cosmology known as QAnon. He was late to the party, attracting attention starting only in the spring of 2021, months after the Jan. 6 riot that seemed at the time to represent the crest of the QAnon wave. But by the time he died, from injuries sustained in a dirt-biking accident in rural Minnesota, Protzman, who had more than 100,000 followers on Telegram, had achieved significance all the same. He was the rare QAnon personality whose flock was willing to follow him offline. In the telling of his family members, Protzman, a demolition contractor in the Seattle area, had for some time been tracing the same path to the far fringe along which he would lead others. His mother told CNN that he nursed financial worries and, after the 2008 economic crash, a conviction that the U.S. dollar would collapse. A preoccupation with Sept. 11 and Sandy Hook conspiracy theories followed. 
In July 2019, Protzman’s wife, who was in the midst of divorcing him, told the police that for weeks he had been “not showering or working and believing in government conspiracies.” He had already left the demolition business they had shared. Shortly after, he began making prophecies. QAnon originated in 2017 with a series of enigmatic communiqués posted to various message boards by someone — “Q” — purporting to be a government official with a high-level security clearance. The overarching claim is that the world is in the clutches of a vast satanic child-abusing cabal of Democrats, celebrities and business leaders, a plot that Donald Trump and a cadre of elite military officials are working in secret to disrupt. In his livestreams and posts, Protzman elaborated on a loose strand of QAnon apocrypha: the contention that John F. Kennedy Jr., the presidential scion who died in a plane crash in 1999, is in fact still alive, living in disguise and secretly working with Trump to overthrow the forces of evil. Protzman claimed to deduce his revelations through gematria, the practice of extracting hidden meanings of words through alphanumeric ciphers. Maybe it was the mystical overlay, or the odd, hollow-eyed delivery of Protzman’s sometimes hourslong livestreams, the diffident authority with which he sprinkled his sentences with numerological nonsense. Something, somehow, convinced dozens of his followers to travel to Dallas on Nov. 1, 2021, and join him in Dealey Plaza, where President Kennedy was assassinated nearly 60 years before, and where, Protzman told them, he would reappear the following day, accompanied by his namesake son. When no resurrection ensued, Protzman led his followers to the venue of a nearby Rolling Stones concert where, he promised, Keith Richards would reveal himself to be J.F.K. Jr. in a mask. When that did not happen, either, they simply stayed in Dallas, taking up residence for months at the Hyatt Regency. 
The failure of the prophecy cost Protzman some followers but drew the rest closer. Family members began speaking of parents and siblings who had broken off contact. Protzman’s pronouncements were — even by QAnon standards — violent, antisemitic, apocalyptic. Now there were reports of followers drinking industrial-bleach concoctions, of ominous notes left behind. “I’m going to follow God,” one of them wrote on the cover of a notebook a relative shared with a reporter for Vice. The alarms proved unfounded, but Protzman’s followers never fully disbanded. Dressed in phantasmagorical variations on Tea Party-chic flag-patterned clothing, they were easy to spot at Trump rallies, where they became a small but regular presence: an embodiment of how the former president’s base had taken on a life of its own, one that splayed far beyond mere politics. “We support the Kennedys and the Trumps,” a woman named Tracey told me at a rally in Pennsylvania last year. She was with a friend whom she met at Protzman’s gathering in Dallas; since then, they said, they had gone to see Trump eight times. “There’s a lot of 5-D chess being played right now,” her friend said, portentously. In the days after Protzman’s death, his followers gathered on Telegram. “I think he has been called to do something very, very important,” one woman suggested. “Not sure what to believe,” another wrote, “cause unless I see it firsthand, it’s just too fishy.”

Charles Homans covers politics for The Times. For the magazine, he most recently wrote about a politicized sexual-assault case in Virginia.

Ian Falconer B. 1959

He never expected to be a children’s-book writer.

Ian Falconer’s first book was self-published in 1996 — printed on laser jet and given to his sister’s family as a Christmas present. 
The heroine was called Olivia, and she was a piglet — partly because she was named after Falconer’s young niece, who had an adorably upturned nose, and partly because pigs were intelligent, and also an enormous amount of fun to draw. Olivia the piglet was headstrong and stubborn, but funny in her own accidental way, and wildly perceptive about the world around her. She was, in other words, a very real child, despite the hoofs — possibly the realest child to enter the kids’-lit canon since Russell Hoban’s refusenik badger, Frances. All the more wonder then that Olivia came very close to remaining a self-published book forever. “I remember that Ian had the laser-jet printout lying around his apartment, and his friends would pick it up and say, ‘You must take this to an agent,’” recalls his eldest sister, Tonia Falconer Barringer. “Maybe,” Falconer would shrug. He didn’t see himself as a children’s-book author. He was a fine artist, classically trained at the Parsons School of Design in New York and the Otis Art Institute in Los Angeles, and now employed, albeit fitfully, as a costume and set designer for opera and ballet performances. When Falconer did finally arrange a visit with a potential agent, he was told his manuscript could be viable, provided he was willing to partner with a more established writer. “Ian’s attitude was, ‘No, this is my character, and this is my voice, and I don’t want another writer,’” Barringer says. “And that was the end of that.” Falconer plugged on in the theater, creating, among other commissions, the backdrop for a stage adaptation of David Sedaris’s “The Santaland Diaries.” In his spare time, he fraternized with a revolving coterie of famous friends and lovers — among them Tom Ford and the painter and occasional set designer David Hockney, who had helped Falconer get his start in the theater world — and began contributing cover art, reminiscent in palette to the Olivia books, to The New Yorker. 
Eventually, those covers caught the attention of Anne Schwartz, a veteran editor of children’s books at Simon & Schuster. Would Falconer be interested in illustrating a kids’ book written by another author, she wondered? Falconer wasn’t, but he had brought along a copy of his pig manuscript. “My ship had come in,” Schwartz later remembered thinking. She disagreed that Falconer needed the help of another writer to make the book great. In her opinion, in fact, the book mostly just needed to be cut down — the final version of “Olivia,” which was published in 2000, is roughly half the length of the original draft. “It was a shock for Ian, going from a theater and fine-art guy to a children’s-book author,” says Conrad Rippy, Falconer’s friend and longtime attorney and literary agent. “But it was a pleasant shock, because the book really took off. It hit immediately.” “Olivia” went on to be named a Caldecott Honor Book, one of the most prestigious awards in children’s books, and stayed on The Times’s best-seller lists for more than a hundred weeks. This was testament, argues Caitlyn Dlouhy, another of his editors at Simon & Schuster, to Falconer’s trust in his audiences. “Ian was sure kids are so much more innately intelligent than anyone gives them credit for,” Dlouhy says. “He knew they could understand droll humor; he didn’t have to overexplain it. He knew exactly how much art could say.” Falconer went on to write a small library of books featuring his porcine heroine, including “Olivia in Venice,” about a gelato-soused expedition to the Italian city, and “Olivia Saves the Circus,” in which the protagonist mounts a spectacular one-piglet show that may or may not have taken place entirely in her own mind. Each book serves as a mini-character-study on the mind of a child — rare is the parent who picks up a Falconer book and doesn’t recognize an echo of a conversation she’s had many times before. 
Falconer never had children himself, but Rippy speculates that may have been a boon: “Unlike a parent,” he says, “Ian had the luxury and ability of removing himself from that situation and processing it. He was just an unbelievably astute and accurate observer of the world around him.” By the time of Falconer’s death, millions of copies of Olivia books were in print, allowing their author a new degree of creative freedom — he could be more discerning about his non-pig-related commissions, like the kaleidoscopically busy set and costume design for a 2015 performance of “The Nutcracker,” at the Pacific Northwest Ballet in Seattle. (In a nice touch, Falconer’s Clara favors bold red and white stripes, as does Olivia, whose portrait was drawn into the set as if she were also watching the show.) “He was at peace with it,” Barringer, his sister, says. “Like, ‘All those years painting and doing sets and costumes, that entire lifetime of work, and I’m going to be remembered for a cartoon pig.’ He thought it was a little funny — and amazing.”

Matthew Shaer is a contributing writer for the magazine, an Emerson Collective fellow at New America and a founder of the podcast studio Campside Media. He last wrote about the cardboard industry.

Steve Harwell B. 1967

He was the voice on one of the most popular songs of the ’90s, but a cartoon ogre upstaged him.

Hard as it is to remember, Steve Harwell and his band, Smash Mouth, were famous before “Shrek.” Before the 2001 animated comedy made nearly $500 million at the box office, the band from San Jose, Calif., had already ascended from the bog of alt-rock aspirants hoping to cash in on the craze. Their debut album, “Fush Yu Mang,” went double platinum, and in 1999 their monstrously successful single “All Star,” which overwhelmed pop radio, was featured in several movie soundtracks and helped the album on which it appeared, “Astro Lounge,” sell millions of copies. 
But it took “Shrek” to turn “All Star” into a cultural phenomenon. Harwell founded Smash Mouth after quitting a rap group. He was the new band’s lead singer despite being an unremarkable vocalist: His voice was a blunt, somewhat nasally and abrasive instrument, but it was also inoffensive, even a little goofy. It was perhaps the only kind of voice that could pull off a song like “All Star,” an anthem for outcasts and those bewildered by their own mediocrity. “Sooome-BODY once told me,” the song begins, Harwell already straining against the limits of his capabilities, “the world is gonna roll me. I ain’t the sharpest tool in the shed.” “I’m not going to toot my own horn,” he told Rolling Stone in a 2019 oral history chronicling the conception of Smash Mouth’s greatest hit, “but nobody else could have sang that song.” He was right. Harwell’s sun-fried vocals — you can hear the Bay Area slacker in him — made the song indelible. “All Star” did not need a talented singer to become a huge hit. It needed someone who could sell slackerdom as something not only appealing but aspirational — the stuff rock stars are made of. There’s a video for this song. It features Harwell — wearing Oakley-style sunglasses with a thin chin-strap beard framing his cherubic face, his hair spiked with gel — but it has faded into the cultural ether, overtaken by “Shrek” two years later. The movie’s opening introduces us to its title character, the disgusting but happy ogre with, strangely, a Scottish accent, through a montage set to “All Star.” Harwell sings along as Shrek showers in mud, brushes his teeth with a bug and kills a fish with his farts — a gleeful outsider, and a repulsive one at that. The movie gave “All Star” a second life, cementing it in American pop-music history — and leashing Harwell to the image of Shrek. At the dawn of Web 2.0, social media allowed audiences to pulp pop culture and use it as the raw material for a new currency: memes. 
“Shrek” pried “All Star” out of the band’s hands and delivered it to an adoring public to do with it what they would. YouTube was lousy with covers and parodies. People put the song in the mouths of everyone from Kermit the Frog to Mario. At the height of mash-up culture, people started splicing “All Star” into other popular songs by the likes of Modest Mouse and Nirvana. By 2013, an entire Reddit community dedicated to “All Star” memes existed. In 2017, Jimmy Fallon did a “Star Wars”-based cover, using clips from the various movies so that the franchise’s cast appeared to perform the song as an ensemble. Harwell might have thought that only he could have brought “All Star” to life, but his voice had now been replaced by another — the internet’s. Though the band’s Twitter account aggressively protected its legacy, Harwell himself rarely spoke ill of “Shrek” or the way the movie altered the song’s trajectory. In tweets, he appeared reliably cheery, whether he was supporting trans rights or posing in photos with his friend Guy Fieri, whom the internet jokingly confused him with. He mostly maintained this affable front despite suffering a devastating blow in 2001: The death of his baby son, Presley, due to complications from leukemia. Though he continued touring and making albums with Smash Mouth, he struggled with alcoholism and its physical toll in the years after that loss. That struggle’s effects were on display in a chaotic performance in October 2021. As the band dealt with a faulty speaker setup, Harwell swayed back and forth, threatened to harm an audience member’s family and at one point gave the Nazi salute. Shortly thereafter, he quit the band. 
“Ever since I was a kid,” he told fans in a farewell, “I dreamed of being a rock star and performing in front of sold-out arenas and have been so fortunate to live out that dream.” But if Harwell had the privilege of being a rock star, he was among the first people to confront the role’s diminished status in the age of social media. That must have been a wildly alienating first: the experience of having a song that you made famous through your voice and your performance not belong to you anymore — to have a cartoon character supersede you as your creation’s avatar in the world.

Ismail Muhammad is a story editor for the magazine. He has written about waves of migration to New York, diversity in publishing and the painter Mark Bradford.

William Friedkin B. 1935

A director who was equal parts exacting and reckless, he made car chases into art.

The stuntman Buddy Joe Hooker came on board about midway through the shooting of “To Live and Die in L.A.” in 1985. For years, the director, William Friedkin, had been thinking about doing a car chase that would surpass the epic one in his breakout 1971 film, “The French Connection,” and this seemed like the right time for it. Friedkin considered chase scenes to be the purest form of cinema, and it’s true that you don’t often find them in other modes of storytelling. But they were also the purest form of Friedkin. For a chase to work — to build and sustain suspense — it needs to be carefully choreographed but feel totally out of control. These were the two sides of Friedkin, an equally exacting and reckless filmmaker who was perfectly happy to break laws, endanger civilians (himself included) and spend money he didn’t have to get a shot he thought he needed. Friedkin decided he wanted to make movies after watching five consecutive showings of “Citizen Kane” on a Saturday in a revival theater on the North Side of Chicago. He was in his mid-20s then and working as a director at a local TV station. 
A chance meeting with a death-row priest soon after led to his first film, a documentary about an inmate, Paul Crump, who was awaiting execution after being convicted of committing a murder during the robbery of a meatpacking plant. The movie wasn’t released at that time — and Friedkin would come to rue its incompetence — but its legacy can nevertheless be seen in his subsequent films, which he shot on location in a vérité, documentary style, with a lot of handheld-camera work, natural light and long, uninterrupted takes. He was on unemployment benefits when, after two years of trying, he sold “The French Connection,” a caper about the bust of an international heroin ring. It was not an ordinary cop film. The main character, Jimmy (Popeye) Doyle, was moody, obsessive, racist and violent. Friedkin rehearsed the tabloid columnist Jimmy Breslin for the role before reluctantly hiring Gene Hackman. “Bullitt,” starring Steve McQueen, had come out only a few years earlier, giving birth to the modern car chase with an electrifying 10-minute scene through the hilly streets of San Francisco. Friedkin had never directed a car chase, but he wanted to have one in “The French Connection,” and he wanted it to be different from the one in “Bullitt.” He and his producer, Philip D’Antoni, came up with an idea: Instead of chasing another car, Doyle would chase an elevated subway train that had been hijacked by one of the bad guys. To get permission to take over a train and a segment of tracks in Brooklyn, they bribed the head of the transit authority with $40,000 and a one-way plane ticket to Jamaica because he was sure it would cost him his job. Unhappy with how the shooting was going, Friedkin taunted the stunt driver, Bill Hickman, over drinks one night: “Bill, I’ve heard how great you are behind the wheel, but so far, you haven’t shown me a damn thing.” Days later, Hickman was racing, unpermitted, through 26 city blocks of live traffic at 90 miles an hour. 
Friedkin manned one of the cameras himself from the back seat of the car, wrapped in mattresses and pillows. The movie won Friedkin an Oscar for best director, and he became an A-list talent, a charter member of the New Hollywood movement that was introducing darkness and moral ambiguity to a storytelling medium that had historically preferred heroic heroes and villainous villains. Even within this unconventional group, Friedkin stood out. Not only had he never gone to film school; he hadn’t attended college. His next movie, “The Exorcist” — arguably Hollywood’s first modern blockbuster — made Friedkin one of the hottest directors in the world. His stratospheric rise ended abruptly just a few years later with his 1977 film “Sorcerer,” the story of four men on the run from the law who find themselves in a remote, impoverished village in Latin America. The bulk of the action follows the men on a virtual suicide mission to drive two explosives-laden trucks across the jungle. It’s a kind of deconstruction of the traditional car chase; between their combustible cargo and the bumpy dirt roads, the only option is to drive slowly and carefully. “Sorcerer” opened weeks after “Star Wars,” the melodramatic sci-fi blockbuster that heralded the return of clear-cut heroes and villains to Hollywood, and it utterly bombed. It has since been rediscovered — it’s a remarkable movie, sweaty with suspense and despair — but it sent Friedkin’s career skidding. He would never fully recover, but “To Live and Die in L.A.,” a neo-noir movie about a Secret Service agent’s obsessive pursuit of a counterfeiter, went at least partway toward arresting his slide. Hooker had never worked with Friedkin, and he was both excited and a bit wary when he got the call for the job. 
He was a huge fan of the chase scene in “The French Connection,” but he had also heard that Friedkin fired half the crew on “Sorcerer.” Friedkin told Hooker that he expected the scene to take about a month to film; the budget would be whatever it required. “Hook,” Friedkin said, “I don’t care what we shoot. If it isn’t better than ‘The French Connection,’ it’s not going in the film.” Then Friedkin told Hooker what he wanted to do: a high-speed chase going against rush-hour traffic on a California freeway. “The minute he said it, I just went, ‘Yes, we can definitely do that,’ having no idea what I was getting myself into,” Hooker recalls. It would require 40 handpicked stuntmen and at least 200 cars. It’s the climax of a nearly eight-minute chase that first takes you through a sun-soaked, unglamorous suburban Los Angeles. Some buffs still argue that the chase scene in “The French Connection” is better, but Friedkin considered this one good enough to use, which, as far as Hooker was concerned, was the highest form of praise.

Jonathan Mahler is a staff writer for the magazine. He has previously written about David Zaslav’s takeover of Warner Bros. and the succession battle over Rupert Murdoch’s media empire.

Angus Cloud B. 1998

A mellow guy discovered outside a downtown bookstore, he became the heart of ‘Euphoria.’

The night the actress and casting scout Eléonore Hendricks met Angus Cloud, she’d just left an acting class in Manhattan. Hendricks was heading to the West Fourth Street subway station in the Village when she spotted them: two slightly roguish-looking young men in front of Mercer Street Books. There was something about the 19-year-old Cloud, strolling with his friend, that stopped her in her tracks. “It’s one of those ineffable things you can’t always put words to,” she told me. “Something happens in your body and your mind and your eyes, physically, when you see someone who’s so beautiful or so striking,” she says. 
He stunned her, though she was able to recover quickly enough to give him her spiel — she was casting a show for HBO, and she thought he’d be perfect for it. The next day, Cloud, who had recently moved to Brooklyn from Oakland, came to an office to meet Hendricks’s boss, the casting director Jennifer Venditti, who liked his “soulfulness” and inquisitive spirit. “What I loved about him too,” she says, was that “you could tell he was out of his element. He wasn’t even necessarily interested in getting the gig,” or being famous. “He was into having experiences and adventure, and this was a mysterious adventure to him.” Cloud was ultimately cast as Fezco, the affable drug dealer on Sam Levinson’s “Euphoria.” Fez became a beloved stoner figure: a preternaturally sage loner with Earth-blue eyes, a brush-fire beard and a gaptoothed grin that gave his face a somewhat mischievous quality. He had the vibe of a Gen-Z Alfred E. Neuman, Mad Magazine’s impish icon. On “Euphoria,” a show about the emotional chaos and bacchanalian exploits of a group of California high school students, Fez was the show’s most compassionate presence. He had gravitas; he was an anchor amid the teenage existential fog and weed haze. In a memorable scene opposite Maude Apatow, who plays Lexi, Cloud mumbled Ben E. King’s “Stand by Me” in a slightly slurred sotto voce, offering a subtle and earnest friendship request between tokes. His raspy vocal stylings underlined the “nonactable” trait Venditti coveted in him — he was just a regular person at his core; someone who put in real effort but wasn’t trying too hard. 
“I was so proud that he was genuinely himself,” his friend Tia Nomore, an actress and fellow Oaklander, said of Cloud’s work on “Euphoria.” He played a version of someone viewers already knew, a “guy” (that euphemism for a pot dealer) or just a guy, a searching soul with eyes Venditti described as “joyful” and “kind.” She said, “There was something about him that you were like, ‘Should I be intimidated by him, or am I falling in love with him?’” Cloud’s authenticity was most pronounced in his speech, which Nomore told me was the elemental Bay Area dialect. Cloud spoke in a quarter-time cadence, a patois of enduring patience. His favorite phrase was a vaguely Marleyan “Bless, bless, one love.” “I think the way someone speaks tells you something about who they are, the rhythm of it,” Venditti says. “He was very relaxed, very chill, very present.” His meditative nature was complemented by an appreciation for everyday escapades: “engaging in any random, spontaneous activity,” hopping fences at Bay Area zoos or “skateboarding or smoking around,” Nomore told me, or doing graffiti. Cloud’s graffiti tags are up throughout Oakland. Mind the gaps among the letters of his handle (an all-caps “Angus”) and you get the tiniest impression of what it was like to be him, hopscotching on buildings, getting his name up on walls. He was an outsider’s prodigy, a quick study of life’s surprises. In 2013, when he was 14 or 15, a mishap changed his life. He was walking around downtown Oakland one day and somehow fell into a pit in a construction site. He told an interviewer he woke up 12 hours later, at the bottom of the hole. “I was trapped. I eventually climbed out after — I don’t know how long. It was hella hard to climb out because my skull was broken, but my skin wasn’t, so all the bleeding was internal, pressing up against my brain.” The fall produced the scythe-shaped scar that was visible in Cloud’s signature buzz cut. It also marked him in other ways. 
He was left with a brain injury that caused severe headaches. Lisa Cloud, his mother, has said that her son started taking opioids after the accident, as a way to manage his pain. Cloud’s life was short, and he became famous very fast; there’s an eerie disconnect between Cloud’s easeful way of being and his cometlike trajectory. Fez was supposed to die in both seasons of “Euphoria,” but Levinson found Cloud too “special” to cut ties with; besides, the character was also a fan favorite. He was on his way up — a rising star. But “he became a shooting star,” Hendricks says. “You can’t hold it; it doesn’t last forever.”

Niela Orr is a story editor for the magazine. She recently wrote about female rappers and the Rolling Loud festival in Miami.

Sally Kempton B. 1943

She was a feminist journalist covering the counterculture — then gave it all up to follow a guru. In 1970, Sally Kempton published an article in Esquire that was the talk of the New York literary world. Since the 1960s, Kempton had been writing sardonic dispatches from the underground scene for The Village Voice, describing in one an antic show by the Velvet Underground at Andy Warhol’s bar. She was an elegant, wiry blonde, a sharp-tongued chain-smoker and, according to one friend, an “object of erotic obsession” for many men. So when her essay came out, with its furious, tormented account of growing up as a young woman trained to be pleasing to men and deny her own intellect, it piqued interest. The essay’s tone is at once superior and pained; for many women at the time, it captured their contradictory feelings. “Once during my senior year in high school I let a boy rape me (that is not, whatever you may think, a contradiction in terms),” Kempton wrote. “Afterward I ran away down the stairs while he followed, shouting apologies which became more and more abject as he realized that my revulsion was genuine, and I felt an exhilaration which I clearly recognized as triumph. 
By letting him abuse me I had won the right to tell him I hated him; I had won the right to hurt him.” Unsparing toward herself, she reserved her most cutting observations for the men closest to her. She described how her father, the renowned journalist Murray Kempton, had molded her like Pygmalion to soothe his own fragile ego. She charged her husband, Harrison Starr, a film producer, with thoughtlessly putting his career before hers. At night, she fantasized about smashing his head in with a frying pan. She didn’t dare, not because she was afraid of hurting him but because “I was afraid that if I cracked his head with a frying pan he would leave me.” Kempton’s marriage ended soon after. Her father was ultimately more forgiving, but the tensions between them simmered for years. With a new boyfriend, Kempton began experimenting with LSD and meditation, seeking relief from persistent anxiety. A few years after the Esquire essay was published, she walked into a room where Swami Muktananda, an Indian master of Siddha yoga who was just bringing his practice to the United States, was leading a meditation. As soon as Kempton entered his presence, she later wrote, “I opened my eyes to a world scintillating with love and meaning.” She dropped everything to join Muktananda (who was also called Baba, meaning “Father”) on his tour across the country, took vows of poverty and celibacy, became a vegetarian and meditated for hours each day. She began living in the guru’s ashrams in upstate New York and in India, where she stayed in a dormitory with 40 other women, her few belongings stowed under her bed. Among the New York literary set, Kempton’s monastic turn was a source of wonder and occasional derision. After years of rigorous training, Kempton was initiated as a monk, was given the name Durgananda (which means bliss of the divine mother) and began dressing in red from head to toe. 
She stayed on for decades — during which Muktananda’s organization became a multimillion-dollar enterprise — teaching, editing the guru’s books and, after he died, supporting his female successor. A longtime friend, the writer Sara Davidson, interviewed her in 2001 and found Kempton unchanged in certain aspects from the sophisticated woman with the wicked sense of humor whom she remembered. But Kempton had a new equanimity and a palpable sense of joy — what she described as the “juicy, vibrant feeling” that was her primary state of being. When Davidson expressed her surprise that Kempton would surrender herself so thoroughly to the authority of a male guru, Kempton replied that she, too, had considered this: Was she running away from her problems by handing power to someone outside herself? But she eventually realized that her relationship with Baba allowed her to tap into the great love that undergirded all life. “I wasn’t in a state of surrender,” she told Davidson. “I was practicing surrender.” Her devotion to her guru was complete. When Muktananda was accused of sexual misconduct toward female acolytes, Kempton stood by him, dismissing the accusations as “laughable” and “ridiculous” in a 1994 New Yorker investigation. (Muktananda did not publicly deny the claims.) In later interviews, Kempton was more circumspect, saying she really couldn’t know what happened. She was also close friends with, and publicly defended, Marc Gafni, a charismatic teacher who was dogged throughout his career by accusations of sexual misconduct. An ordained rabbi, he was renounced in 2015 by more than 100 leaders of the Jewish community and founded a center for spirituality, where Kempton taught. (Gafni says the claims were false, the result of a smear campaign.) 
When I asked her brother David Kempton about her capacity to accept Gafni, he told me that Kempton held a very broad spiritual perspective, believing that “everything is ultimately a manifestation of the divine in some way.” That didn’t mean that everything was good, he explained, but she was holding “the complexity of the world and all its realities and at the same time holding a space of compassion and love.” Worldliness and transcendence, desire and mastery of desire: These were some of the paradoxes of meditative practice that Kempton wrote about and embodied. She was the author of several books, one of which, “Meditation for the Love of It,” is considered a classic guide. Her writing on meditation has the snap and intimacy of her early journalism, but all archness has been scrubbed away, and what’s left is a voice that is curious and confiding. “Meditation is like any other intimate relationship: It requires patience, commitment and deep tolerance,” she writes. “Just as our encounters with others can be wondrous but also baffling, scary and even irritating, our encounters with the self have their own moods and flavors.” In the early 2000s, Kempton left the ashram. She was ready to rejoin the world and resume her old name. She became a beloved meditation teacher for tens of thousands of students. “She took the discipline and depth of ashram life and brought it to yoga studios across the U.S. and Europe,” Tara Judelle, a former student of Kempton’s and a spiritual teacher, told me. “She was like Jacques Cousteau of the meditative world.” In a video on her YouTube channel, Kempton sits in her Carmel Valley, Calif., home, talking in a stream about her ardent striving for self-knowledge, a striking woman with graying hair and twinkling eyes. She is witty and accessible, even when speaking about inner mysteries. “I’m really interested,” she says, smiling, with no trace of her old irony, “in full enlightenment.” Sasha Weiss is a deputy editor for the magazine. 
She has written about Janet Malcolm’s unsparing journalism, Justin Peck’s choreography and Judy Chicago’s feminist art.

Louise Glück B. 1943

The Nobel-winning poet was pitiless to herself, yet fiercely generous toward her students. She stood barely five feet tall — slight, unassuming, you had to stoop low to kiss her cheek — but whenever Louise Glück stepped into a classroom, she shot a current through it. Students stiffened their spines, though what they feared was not wrath but her searing rigor: Even in her late 70s, after she won the Pulitzer and the National Humanities Medal and the Nobel, she always spoke to young writers with complete seriousness, as if they were her equals. “My first poem, she ripped apart,” says Sun Paik, who took Glück’s poetry class as a Stanford undergraduate. “She’s the first person whom I ever received such a brutal critique from.” Mark Doty, a National Book Award-winning poet who studied under Glück in the 1970s at Goddard College, felt that she “represented total authenticity and complete honesty.” This, he recalls, “pretty much scared me half to death.” Spare, merciless, laser-precise: Glück’s signature style as a writer. It was there from an early age. Born in 1943 to a New York family of tactile pragmatists (her father helped invent the X-Acto knife), Glück, a preternaturally self-competitive child, was constantly trying to whittle away at her own perceived shortcomings. When she was a teenager, she developed anorexia — that pulverizing, paradoxical battle with both helplessness and self-control — and dropped to 75 pounds at 16. The disorder prevented her from completing a college degree. Many of the poems Glück wrote in her early 20s flog her own obsessions with, and failures in, control and exactitude. Her narrators are habitués of a kind of limitless wanting; her language, a study in ruthless austerity. 
(A piano-wire-taut line tucked in her 1968 debut, “Firstborn”: “Today my meatman turns his trained knife/On veal, your favorite. I pay with my life.”) In her late 20s, Glück grew frustrated with writing and was prepared to renounce it entirely. So she took, in 1971, a teaching job at Goddard College. To her astonishment, being a teacher unwrapped the world — it bloomed anew with possibility. “The minute I started teaching — the minute I had obligations in the world — I started to write again,” Glück would confess in a 2014 interview. Working with young minds quickly became a sort of nourishment. “She was profoundly interested in people,” says Anita Sokolsky, a friend and colleague from Williams College, where Glück began teaching in 1984. “She had a vivid and unstinting interest in others’ lives that teaching helped focus for her. Teaching was very generative to her writing, but it was also a kind of counter to the intensity and isolation of her writing.” Glück’s own poems became funnier and more colloquial, marrying the control she earlier perfected with a new, unexpected levity (in her 1996 poem “Parable of the Hostages”: “What if war/is just a male version of dressing up”), and it is her later books, like the lauded “The Wild Iris” from 1992, that made her a landmark literary figure. Teaching also coaxed out a new facet in Glück herself: that of a devoutly unselfish mentor, a tutor of unbridled kindness. A less fastidious writer and thinker may have made their teaching duties rote — proffering uniformly encouraging feedback or reheating a syllabus year after year. Glück, though, threw herself into guiding pupils with the same care and intimacy she gave to her own verses. “There was just this voraciousness, this generosity,” says Sally Ball, who met Glück while studying with her at Williams and remained close with her for the three decades until her death. “Every time I moved, she put me in touch with people in that new place. 
She enjoyed bringing people to know each other and sharing the things she loved.” And as a teacher, Ball says, “Louise was really clear that you have to make yourself change. You can’t just keep doing the same things over and over again.” In that spirit of boundless self-advancement, Glück also taught herself to love cooking and eating. She once hand-annotated a Marcella Hazan recipe and mailed it to Ball, with sprawling commentary on how best to prepare rosemary. “She’s very beautiful and elegant, right,” Ball says, but “we’d go to Chez Panisse and sit down and she eats with gusto. It’s messy, she’s mopping her hands around on the plate.” Paik recalls spending hours each week decoding Glück’s dense, cursive comments on her work. “I was 19 or 20,” she says, “writing these scrappy, honestly pretty bad poems, and to have them be received with such care and detail — it pushed me to become a better writer because it set a standard of respect.” “She was 78, and whenever she talked about poetry, it felt like the first time she’d encountered poetry,” says Shangyang Fang, who met Glück when he was at Stanford on a writing fellowship. Glück offered to edit his first poetry collection, and the pair became close friends. “She would talk about a single word in my poem for 10 minutes with me,” Fang says. Evenings would go late. They cooked for each other sometimes, spending hours talking vegetables and spices, poetry and idle gossip. “By the end, I couldn’t thank her enough, and she said: ‘Stop thanking me! I am a predator, feeding on your brain!’”

Amy X. Wang is the assistant managing editor for the magazine. She has written about the rise of superfake handbags, dogsitting for New York City’s opulent elite and the social paradox of ugly shoes.

Rachel Pollack B. 1945

Her life as a trans woman gave her insight into the transformative power of tarot. 
There are at least as many stories about the origins of tarot as there are cards: They represent the secret oral teachings of Moses, the Egyptian Book of Thoth, a hidden resource created by a papermaking guild of Cathars. But Rachel Pollack — considered by many, at the time of her death, to be the world’s greatest expert on the cards — always refused esoteric theories. “The more I think about it,” she wrote, “the more it appeals to me that these are playing cards.” Tarot decks have four suits that run from ace to king, but also include 22 illustrated “trump” cards, among them Death, Temperance, The Lovers. Over time, players imagined new uses for the cards, radically expanding the field of play to include occult interpretation, artistic elaboration and fortunetelling. Today, tarot is more popular than ever. For many, Pollack’s “78 Degrees of Wisdom” is their first guide to the cards, a best seller first published in 1980. Pollack moves nimbly across a staggering range of mythic traditions in her interpretations of the cards, but she has always been an antischolastic kind of scholar: “We can use tarot,” she argued, “to remove the pins that hold down all those other traditions,” to reveal heretical connections between them. Common wisdom teaches us to play the cards we’re dealt, but tarot reshuffles the formula: What if the meaning and function of these cards is not fixed — if we can change the game by deepening our powers of perception? Pollack was born in 1945 to a middle-class Jewish family in Brooklyn. In her early years, they moved to Poughkeepsie, N.Y., where her father supervised a lumberyard and her mother worked as a secretary at IBM. When Rachel was 8 or 9 — already harboring an intense desire “to wear girls’ clothes, and somehow, in some vague way, be thought of as a girl” — she suffered chronic nightmares that only relented when her great-grandmother told her mother “to place a small Jewish prayer book” beneath her mattress. 
The image of the sacred text, hidden from consciousness but nonetheless active, would become a touchstone for Pollack’s philosophy of knowledge. “All myths have their uses,” she later wrote, referring to social constructs like gender and the clock alongside the theologies of organized religions and ancient cults. But she would be the one to choose among them in the moment, to entertain those that extended her sense of life’s possibility, and break down those that aspired to the status of doctrine. The year 1971 was a crucible for Pollack: the year she first encountered tarot, and the year she came out as a woman and a lesbian. She didn’t look to the cards for reassurance: “I found I had no interest in any predictions.” Instead, she was fascinated by each symbol as “a frozen moment in a story” that only she could mobilize. She began to publish science fiction under her chosen name; she joined the Gay Liberation Front in London; she pursued surgical transition in the Netherlands long before such procedures were common practice. But for Pollack, transition was never about achieving “a desired end result” or making “a sensible life choice.” She assumed the position of tarot’s Fool, dancing on the cliff’s edge, driven by “the passion of experience” over and against what she called the “Empire of Explanation.” Rachel Pollack joins a line of major figures in tarot history who led queer lives: Pamela Colman Smith, illustrator of the world-famous Rider Waite; and Aleister Crowley, mastermind of the Thoth deck. 
The writer Alexander Chee, who is gay, and befriended Pollack while they were both teaching creative writing at Goddard College, told me that queerness and occultism are “about learning that the world is not the way we are told it is.” Chee remembers Pollack draped in amulets, like a “small-town librarian who is secretly a witch of great power.” She was always careful, however, to remind us that her power wasn’t “special” — we all have the opportunity to derive wisdom from our desires and disenchantments. But even when we try to formulate original questions, we often find ourselves following familiar scripts: “Will my mother accept me as I am?” “Should I leave my husband?” Pollack would often encourage readers to surrender their questions to the cards, to begin by asking: “What do I need to know now?” Chance — so often an agent of tragedy and chaos — can also surface difficult insights. In writing this remembrance, I shuffled the deck and drew a card in her honor: Death. For Pollack, Death symbolized “the precise moment at which we give up the old masks and allow the transformation to take place.” But “those are ideas,” she would say, insisting we find our own meaning in the image, particular to the moment of our reading. “What do you see?” I see: a black flag, a white rose, a child whose future we still have time to save.

Carina del Valle Schorske is a writer and translator living in Brooklyn. Her first book, “The Other Island,” is forthcoming from Riverhead. Her essay for the magazine about New York’s plague-time dance floors won a National Magazine Award.

Sandra Day O’Connor B. 1930

The first woman on the Supreme Court had little interest in the feminism of her era. In 1970, Sandra Day O’Connor ran to keep her seat in the Arizona Senate on a brief and bland platform: “Good government. 
Efficiency.” At 40, she could reap the local connections she made in the previous decade, when she volunteered for the Junior League of Phoenix, a women’s social club, while she took care of her three young sons and her husband built his career at a prominent law firm. Running as a Republican, O’Connor distanced herself from the feminist movement that was beginning to change (and threaten) middle-class America. “I come to you with my bra and wedding ring on,” she assured an audience at the Rotary and Kiwanis Clubs in Phoenix. Two years later, O’Connor rose to Senate majority leader and had to manage the debate in Arizona over whether to ratify the Equal Rights Amendment, the state-by-state effort to enshrine sex equality in the Constitution. O’Connor’s conservative political allies, including her friend Senator Barry M. Goldwater, opposed the E.R.A. In the Judiciary Committee of the Arizona Senate, O’Connor supported sending the amendment to the floor for a vote by the full chamber. But when she lost in committee, she shifted. O’Connor proposed, as an alternative to the E.R.A., taking off the books hundreds of Arizona statutes that discriminated against women in remarkably direct ways, like requiring a wife to have the permission of her husband to buy a car. When she first joined the Legislature, she tried to eliminate such provisions and got nowhere, according to “First,” a biography by Evan Thomas. But now O’Connor saw that her colleagues were ready to make laws gender neutral, and a bill to amend more than 400 of them passed easily. Eight years later, after O’Connor became a state appellate judge, President Ronald Reagan had his first chance to nominate a Supreme Court justice. O’Connor had no experience in federal law. But Reagan campaigned on a promise to choose the nation’s first female justice. O’Connor’s soothing moderation endeared her to the powerful men advising the president. Goldwater called Reagan on her behalf. Justice William H. 
Rehnquist, O’Connor’s classmate from Stanford Law School and former beau, put in a word for her. When the attorney general sent two staff members to Phoenix to vet O’Connor in late June 1981, she read the room correctly and said she found abortion personally repugnant — without tipping her hand on how she would vote on the issue — and then pulled off an elegant salmon mousse for lunch on a hot summer’s day. The Senate confirmed O’Connor unanimously that September. One Republican hope for her was that she would be able to assemble unlikely coalitions for conservative outcomes, a counterpart to Justice William J. Brennan Jr., the liberal known for masterminding winning majorities. But O’Connor became the most powerful justice of her era for a different reason. She was the fifth vote — the one who could dictate the reasoning, in case after case, because she was essential. O’Connor disliked the term “swing justice.” She didn’t oscillate from right to left so much as hew to her idea of the middle. “Anthony Kennedy swung from one side to the other with enthusiasm,” says the Stanford law professor Pamela Karlan, speaking of O’Connor’s fellow Reagan appointee. “She would split the difference.” O’Connor offered no grand theory. She concentrated on the facts of each case, giving herself room to tack in a different direction the next time. She cared about the impact of her rulings, but sometimes her approach vexed nearly everyone. In 1992, in Planned Parenthood v. Casey, O’Connor helped reaffirm the core of Roe v. Wade by joining a majority that adopted a test she came up with years earlier: The state could regulate abortion as long as a law did not impose an undue burden on a woman’s abortion decision. Justice Antonin Scalia pounced on the vagueness of the standard — what counted as “undue”? 
— calling it a “shell game.” Over time, abortion rights advocates became frustrated, too, as courts permitted restrictions even when they diminished access to abortion in large areas of the country. In the 1990s and 2000s, as conservative and liberal justices increasingly outlined and debated competing and overarching philosophies — originalism, living constitutionalism — O’Connor did not join them or change her ways. “Appropriately for a pragmatist,” says John Harrison, a law professor at the University of Virginia, “she felt confident about being one without feeling the need to provide a theoretical justification for her pragmatism.” Justice Stephen G. Breyer, who retired last year, aligned with O’Connor in closely considering the potential impact of a ruling. “We might not have always agreed, but we did have a similar outlook,” he said, putting two other justices from bygone eras, John Marshall and David H. Souter, in the same category. “Sandra was a person who tried to get other people to get together, to be practical, to understand that consequences matter, to understand that the court is one of our governing institutions — not the only one and not always the most important one — that’s part of how we keep 300-something million people together. She understood that.”

Photograph: National Portrait Gallery, Smithsonian Institution; gift of the Portrait Project, Inc.

Emily Bazelon is a staff writer for the magazine and the Truman Capote fellow for creative writing and law at Yale Law School.

Rosalynn Carter B. 1927

She fell in love with him at 17 but worried that marriage would waylay her dreams. Three miles lie between this life and another, between their two houses, hers in downtown Plains, Ga., and his family farm in the country surrounded by peanuts planted in red clay. Three miles between the ordinary and extraordinary. 
As a girl, she — Rosa Smith — sometimes runs away, just across the street to play with the neighbor boys, to feel what it would feel like: escape, freedom, adventure. She reads “Heidi” and “Robinson Crusoe.” She knows there must be something bigger out there. When her daddy is diagnosed with leukemia at 44, she’s 13, the oldest of four children. That’s when she’ll say her childhood ends. She takes his wishes seriously, though: She will go to college. She will try not to cry. Some years later — four to be exact, during World War II — she notices Jimmy Carter for the first time. He’s just a picture on the wall at her friend Ruth’s farmhouse, away at the Naval Academy now. Rosa’s clan are better bred and more educated than the Carters, but the Carters are what you call individualists. Their mama, Lillian, comes and goes as a nurse from every home, regardless of skin color. Jimmy’s daddy, Earl, has built some sort of clay tennis court out there, puts all kinds of spin on the ball when he beats his son. The family sits and reads during dinner, sometimes not even talking to one another. Imagine that: elbow to elbow, their noses in books, Jimmy reading “War and Peace.” Shy and introverted, Rosa can’t stop thinking about that picture on the wall, that uniformed Carter boy with the sweet smile who’s broken the force field and gone far away. He comes home on leave, in the summer of 1945. He’s 20, she’s 17. Ruth conspires to have them meet, at a cleanup after some local event. Jimmy kids Rosa when she makes sandwiches with mismatched pieces of bread and salad dressing instead of mayonnaise. She feels, for the first time, that she can talk — really talk — to a boy, that there’s something serious but giving about him. Later, after saying goodbye, she goes to a youth meeting at church, and a car pulls up, and suddenly Jimmy Carter is walking across that lawn as if he’s in a movie scene, asking if she’d like to double-date with him, his sister and her date. 
They ride in the rumble seat, and years later neither will remember what movie they saw, but there was a full moon and a kiss to remember because it was not like Rosa Smith to let a boy kiss her on a first date. When people will come to think of Jimmy and Rosalynn Carter’s 77-year marriage, which will take place almost exactly a year from that full moon, they will think of two as one, as a soldered single entity, never at odds, compatibly twinned shoulder to shoulder building houses or trying to eradicate Guinea worm in Africa or abiding their God at church in Plains. It will perhaps seem that Rosalynn has always been eclipsed by the public face — and later, the faint saintly glow — of Jimmy Carter. That Jimmy Carter was bound for his successes no matter who was behind him or by his side. Nothing could be further from the truth. When the Carter boy proposes marriage at Christmas — not long after those two atomic bombs have been dropped on Japan — she says no at first. Valedictorian of her high school class, she promised her daddy college. Love, yes, but no begins the real negotiation. Terms and expectations. And compromise: She earns a junior college degree, then joins her new husband as a Navy wife, bouncing from coast to coast, even to Hawaii. They have a boy, and another, and eventually another. She’s in charge while he’s on subs or working on base. When Earl dies and Jimmy is called back to run the farm, she cries most of the car ride home. That’s how she remembers it, crying and yelling, too. Go back, what for? She’s so livid on that ride that she talks to Jimmy only through her oldest son, Jack. Jack, would you tell your fath-ah. … Disagree as they might, their ferment and conflict proliferate into a full equal partnership. She does the books at the warehouse, explains the inner workings of their peanut business to him: how much money they have, how much credit, their next best move. 
When he runs for local office, she begins calling everyone in the county, straight off the voter list. He runs for governor, she goes everywhere: talks to people in fire stations and Kmarts. Rides in shrimp boats, goes up in a hot-air balloon, attends a rattlesnake roundup. People — including family — say Rosa, the shy introvert, is the political mastermind. He wants to do what’s right. She wants to win. He has 2 percent name recognition entering the 1976 presidential race. She drives all over Florida, pointing herself at radio antennas, walks into each station and says, Would you like to interview the candidate’s wife? She travels to Iowa, New Hampshire, anywhere they need her, sponging the hurts and desires of America and then telling Jimmy words to say. Within another year, she’s in Washington on a cold winter morning, fussing over her hair and worrying over her 9-year-old, her youngest child, Amy, while Jimmy practices reading his Inaugural Address aloud. It’s a rough-and-tumble ride, the presidency. For her it’s like crossing the street as a child with no idea what might be found on the other side. She adores the adventure of each day, the hard-knuckled politics of what comes next. She’s the first first lady to create her own office in the East Wing. She sits in on cabinet meetings, another first. She furthers her mental-health agenda, becomes an envoy to Central America. The press nicknames her Mrs. President, calls her Steel Magnolia. Go back and watch the clips of Morley Safer of “60 Minutes” challenging her husband’s abilities, as she smiles sphinxlike at some barbed question and offers a bromide — there’s no one better than Jimmy Carter to run this country — while the glitter in her eye says, I would like to punch your nose, Mr. Morley Safer. Her husband is described as a mix of tenderness and detachment, as the historian Garry Wills says, his demeanor a “cooing which nonetheless suggests the proximity of lions.” She is the lion. 
When they come back to Plains — again — after four years, she is more disappointed than he. (“I’m bitter enough for both of us,” she quips to staff on Election Day when someone remarks on Jimmy’s equanimity.) There were so many programs in place, so much more to realize — it crushes her to see Ronald Reagan undo it all — and she believes, will always believe, that no one truly understood that the world was a better place with Jimmy Carter as president. He never brought his country to war; his priorities were human rights and the environment. Of course, their last act becomes their most important: Each will say maybe it was a blessing to have four more years to add to the decades of work — from peace negotiations to disease eradication — at the Carter Center. And the public collects images of them growing old together, in each other’s company, shoulder to shoulder, circling the globe on their various missions, returning to Plains, which becomes their comfort and sanctuary, a place where they go biking or fishing together, where they collect their little miracles of small-town life. They still argue and bicker. A book they try to write together nearly ends their marriage. But by the end of each day, they’ve reconciled. She does tai chi while he reads. They tell people that some space — everyone sets their own distance — can do wonders for a marriage. They bury her at a willow tree by a pond on the property where they’ve lived together these 43 years since the White House, roughly midway between Rosa Smith’s childhood house and Jimmy Carter’s farm out in those red clay fields. Michael Paterniti, a contributing writer for the magazine, is at work on a book about the discovery of the North Pole. Top carousel image credits: Mark Borthwick (Sinead O’Connor); Michael P. 
Smith/Gift of Master Digital Corporation, via The Historic New Orleans Collection (Tina Turner); Kendall Bessent (Angus Cloud); Diana Walker/Getty Images (Rosalynn Carter); Bela Zola/Mirrorpix, via Getty Images (Burt Bacharach).